Introduction

Who am I?

A portrait of the author in the form he will assume over the course of this project, having returned to our time to warn his present self against pursuing this course of action.

My name is Nathan Douglas. The best source of information about my electronic life is probably my GitHub profile. It almost certainly would not be my LinkedIn profile. I also have a blog about non-computer-related stuff here.

What Do I Do?

The author in his eventual form advising the author in his present form not to do the thing, and why.

I've been trying to get computers to do what I want, with mixed success, since the early mid-nineties. I earned my Bachelor's in Computer Science from the University of Nevada at Las Vegas in 2011, and I've been working as a software/DevOps engineer ever since, depending on gig.

I consider myself a DevOps Engineer. I consider DevOps a methodology and a role, in that I try to work in whatever capacity I can to improve the product delivery lifecycle and shorten delivery lead time. I generally do the work that is referred to as "DevOps" or "platform engineering" or "site reliability engineering", but I try to emphasize the theoretical aspects, e.g. Lean Management, systems thinking, etc. That's not to say that I'm an expert, but just that I try to keep the technical details grounded in the philosophical justifications, the big picture.

Background

"What would you do if you had an AMD K6-2 333MHz and 96MB RAM?" "I'd run two copies of Windows 98, my dude."

At some point in the very early 00's, I believe, I first encountered VMWare and the idea that I could run a computer inside of another computer. That wasn't the first time I'd encountered a virtual machine -- I'd played with Java in the '90's, and played Zork and other Infocom and Inform games -- but it might've been the first time that I really understood the idea.

And I made use of it. For a long time – most of my twenties – I was occupied by a writing project. I maintained a virtual machine that ran a LAMP server and hosted various content management systems and related technologies: raw HTML pages, MediaWiki, DokuWiki, Drupal, etc, all to organize my thoughts on this and other projects. Along the way, I learned a whole lot about this sort of deployment: namely, that it was a pain in the ass.

I finally abandoned that writing project around the time Docker came out. I immediately understood what it was: a less tedious VM. (Admittedly, my understanding was not that sophisticated.) I built a decent set of skills with Docker and used it wherever I could. I thought Docker was about as good as it got.

At some point around 2016 or 2017, I became aware of Kubernetes. I immediately built a 4-node cluster with old PCs, doing a version of Kubernetes the Hard Way on bare metal, and then shifted to a custom system with four VMWare VMs that PXE booted, set up a CoreOS configuration with Ignition and what was then called Matchbox, and formed into a self-healing cluster with some neat toys like GlusterFS, etc. Eventually, though, I started neglecting the cluster and tore it down.

Around 2021, my teammates and I started considering a Kubernetes-based infrastructure for our applications, so I got back into it. I set up a rather complicated infrastructure on a three-node Proxmox VE cluster that would create three three-node Kubernetes clusters using LXC containers. From there I explored ArgoCD and GitOps and Helm and some other things that I hadn't really played with before. But again, my interest waned and the cluster didn't actually get much action.

A large part of this, I think, is that I didn't trust it to run high-FAF (Family Acceptance Factor) apps, like Plex, etc. After all, this was supposed to be a cluster I could tinker with, and tear down and destroy and rebuild at any time with a moment's notice. So in practice, this ended up being a toy cluster.

And while I'd gone through Kubernetes the Hard Way (twice!), I got the irritating feeling that I hadn't really learned all that much. I'd done Linux From Scratch, and had run Gentoo for several years, so I was no stranger to the idea of following a painfully manual process filled with shell commands and waiting for days for my computer to be useful again. And I did learn a lot from all three projects, but, for whatever reason, it didn't stick all that well.

Motivation

In late 2023, my team's contract concluded, and there was a possibility I might be laid off. My employer quickly offered me a position on another team, which I happily and gratefully accepted, but I had already applied to several other positions. I had some promising paths forward, but... not as many as I would like. It was an unnerving experience.

Not everyone is using Kubernetes, of course, but it's an increasingly essential skill in my field. There are other skills I have – Ansible, Terraform, Linux system administration, etc – but I'm not entirely comfortable with my knowledge of Kubernetes, so I'd like to deepen and broaden that as effectively as possible.

Goals

I want to get really good at Kubernetes. Not just administering it, but having a good understanding of what is going on under the hood at any point, and how best to inspect and troubleshoot and repair a cluster.

I want to have a fertile playground for experimenting; something that is not used for other purposes, not expected to be stable, ideally not even accessed by anyone else. Something I can do the DevOps equivalent of destroying with an axe, without consequences.

I want to document everything I've learned exhaustively. I don't want to take a command for granted, or copy and paste, or even copy and paste after nodding thoughtfully at a wall of text. I want to embed things deeply into my thiccc skull.

Generally, I want to be beyond prepared for my CKA, CKAD, and CKS certification exams. I hate test anxiety. I hate feeling like there are gaps in my knowledge. I want to go in confident, and I want my employers and teammates to be confident of my abilities.

Approach

This is largely going to consist of me reading documentation and banging my head against the wall. I'll provide links to the relevant information, and type out the commands, but I also want to persist this in Infrastructure-as-Code. Consequently, I'll link to Ansible tasks/roles/playbooks for each task as well.

Cluster Hardware

I went with a PicoCluster 10H. I'm well aware that I could've cobbled something together and spent much less money; I have indeed done the thing with a bunch of Raspberry Pis screwed to a board and plugged into an Anker USB charger and a TP-Link switch.

I didn't want to do that again, though. For one, I've experienced problems with USB chargers seeming to lose power over time, and some small switches getting flaky when powered from USB. I liked the power supply of the PicoCluster and its cooling configuration. I liked that it did pretty much exactly what I wanted, and if I had problems I could yell at someone else about it rather than getting derailed by hardware rabbit holes.

I also purchased ten large heatsinks with fans, specifically these. There were others I liked a bit more, and these interfered with the standoffs that were used to build each stack of five Raspberry Pis, but these seemed as though they would likely be the most reliable in the long run.

I purchased SanDisk 128GB Extreme microSDXC cards for local storage. I've been using SanDisk cards for years with no significant issues or complaints.

The individual nodes are Raspberry Pi 4B/8GB. As of the time I'm writing this, Raspberry Pi 5s are out, and they offer very substantial benefits over the 4B. That said, they also have higher energy consumption, lower availability, and so forth. I'm opting for a lower likelihood of surprises because, again, I just don't want to spend much time dealing with hardware and I don't expect performance to hinder me.

Frequently Asked Questions

So, how do you like the PicoCluster so far?

I have no complaints. Putting it together was straightforward; the documentation was great, everything was labeled correctly, etc. Cooling seems very adequate and performance and appearance are perfect.

Have you considered adding SSDs for mass storage?

Yes, and I have some cables and spare SSDs for doing so. I'm not sure if I actually will. We'll see.

Meet the Nodes

It's generally frowned upon nowadays to treat servers like "pets" as opposed to "cattle". And, indeed, I'm trying not to personify these little guys too much, but... you can have my custom MOTD, hostnames, and prompts when you pry them from my cold, dead fingers.

The nodes are identified with a letter A-J and labeled accordingly on the ethernet port so that if one needs to be replaced or repaired, that can be done with a minimum of confusion. Then, I gave each the name of a noble house from A Song of Ice and Fire and gave it a MOTD (based on the coat of arms) and a themed Bash prompt.

In my experience, when I'm working on multiple servers simultaneously, it's good for me to have a bright warning sign letting me know, as unambiguously as possible, which server I'm actually logged in to. (I've never blown up prod thinking it was staging, but if I'm shelled into prod I'm deeply concerned about that possibility.)

This is just me being a bit over-the-top, I guess.

✋ Allyrion

🐞 Bettley

🦢 Cargyll

🍋 Dalt

🦩 Erenford

🌺 Fenn

🧤 Gardener

🌳 Harlton

🏁 Inchfield

🦁 Jast

Node Configuration

After physically installing and setting up the nodes, the next step is to perform basic configuration. You can see the Ansible playbook I use for this, which currently runs the following roles:

goldentooth.configure:

- Set timezone; the last thing I need when working with computers is to perform arithmetic on times and dates.

- Set keyboard layout; this should be set already, but I want to be sure.

- Enable overclocking; I've installed an adequate cooling system to support the Pis running full-throttle at their full spec clock.

- Enable fan control; the heatsinks I've installed include fans to prevent CPU throttling under heavy load.

- Enable and configure certain cgroups; this allows Kubernetes to manage and limit resources on the system.

  - cpuset: This is used to manage the assignment of individual CPUs (both physical and logical) and memory nodes to tasks running in a cgroup. It allows for pinning processes to specific CPUs and memory nodes, which can be very useful in a containerized environment for performance tuning and ensuring that certain processes have dedicated CPU time. Kubernetes can use cpuset to ensure that workloads (containers/pods) have dedicated processing resources. This is particularly important in multi-tenant environments or when running workloads that require guaranteed CPU cycles. By controlling CPU affinity and ensuring that processes are not competing for CPU time, Kubernetes can improve the predictability and efficiency of applications.

  - memory: This is used to limit the amount of memory that tasks in a cgroup can use. This includes both RAM and swap space. It provides mechanisms to monitor memory usage and enforce hard or soft limits on the memory available to processes. When a limit is reached, the cgroup can trigger OOM (Out of Memory) killer to select and kill processes exceeding their allocation. Kubernetes uses the memory cgroup to enforce memory limits specified for pods and containers, preventing a single workload from consuming all available memory, which could lead to system instability or affect other workloads. It allows for better resource isolation, efficient use of system resources, and ensures that applications adhere to their specified resource limits, promoting fairness and reliability.

  - hugetlb: This is used to manage huge pages, a feature of modern operating systems that allows the allocation of memory in larger blocks (huge pages) compared to standard page sizes. This can significantly improve performance for certain workloads by reducing the overhead of page translation and increasing TLB (Translation Lookaside Buffer) hits. Some applications, particularly those dealing with large datasets or high-performance computing tasks, can benefit significantly from using huge pages. Kubernetes can use it to allocate huge pages to these workloads, improving performance and efficiency. This is not going to be a concern for my use, but I'm enabling it anyway simply because it's recommended.

- Disable swap. Kubernetes doesn't like swap by default, and although this can be worked around, I'd prefer to avoid swapping on SD cards. I don't really expect a high memory pressure condition anyway.

- Set preferred editor; I like nano, although I can (after years of practice) safely and reliably exit vi.

- Set certain kernel modules to load at boot:

  - overlay: This supports OverlayFS, a type of union filesystem. It allows one filesystem to be overlaid on top of another, combining their contents. In the context of containers, OverlayFS can be used to create a layered filesystem that combines multiple layers into a single view, making it efficient to manage container images and writable container layers.

  - br_netfilter: This allows bridged network traffic to be filtered by iptables and ip6tables. This is essential for implementing network policies, including those related to Network Address Translation (NAT), port forwarding, and traffic filtering. Kubernetes uses it to enforce network policies that control ingress and egress traffic to pods and between pods. This is crucial for maintaining the security and isolation of containerized applications. It also enables the necessary manipulation of traffic for services to direct traffic to pods, and for pods to communicate with each other and the outside world. This includes the implementation of services, load balancing, and NAT for pod networking. And by allowing iptables to filter bridged traffic, br_netfilter helps Kubernetes manage network traffic more efficiently, ensuring consistent network performance and reliability across the cluster.

- Load above kernel modules on every boot.

- Set some kernel parameters:

  - net.bridge.bridge-nf-call-iptables: This allows iptables to inspect and manipulate the traffic that passes through a Linux bridge. A bridge is a way to connect two network segments, acting somewhat like a virtual network switch. When enabled, it allows iptables rules to be applied to traffic coming in or going out of a bridge, effectively enabling network policies, NAT, and other iptables-based functionalities for bridged traffic. This is essential in Kubernetes for implementing network policies that control access to and from pods running on the same node, ensuring the necessary level of network isolation and security.

  - net.bridge.bridge-nf-call-ip6tables: As above, but for IPv6 traffic.

  - net.ipv4.ip_forward: This controls the ability of the Linux kernel to forward IP packets from one network interface to another, a fundamental capability for any router or gateway. Enabling IP forwarding is crucial for a node to route traffic between pods, across different nodes, or between pods and the external network. It allows the node to act as a forwarder or router, which is essential for the connectivity of pods across the cluster, service exposure, and for pods to access the internet or external resources when necessary.

- Add SSH public key to root's authorized keys; this is already performed for my normal user by Raspberry Pi Imager.

goldentooth.set_hostname: Set the hostname of the node (including a line in /etc/hosts). This doesn't need to be a separate role, obviously. I just like the structure as I have it.

goldentooth.set_motd: Set the MotD, as described in the previous chapter.

goldentooth.set_bash_prompt: Set the Bash prompt, as described in the previous chapter.

goldentooth.setup_security: Some basic security configuration. Currently, this just uses Jeff Geerling's ansible-role-security to perform some basic tasks, like setting up unattended upgrades, etc, but I might expand this in the future.
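The cgroup and swap settings in the list above are easy to spot-check after a reboot. What follows is a minimal sketch rather than part of the actual roles: the function names are hypothetical, and it assumes a kernel that still exposes /proc/cgroups (name, hierarchy, count, enabled) and /proc/swaps (a header line, then one line per active swap area).

```shell
# Print the names of enabled cgroup controllers, one per line.
# Reads /proc/cgroups-style text on stdin; the fourth column is the enabled flag.
enabled_cgroups() {
  awk '!/^#/ && $4 == 1 { print $1 }'
}

# Print each wanted controller (given as arguments) that is not enabled.
# Reads /proc/cgroups-style text on stdin.
missing_cgroups() {
  enabled="$(enabled_cgroups)"
  for want in "$@"; do
    printf '%s\n' "$enabled" | grep -qxF "$want" || echo "$want"
  done
}

# Count active swap areas in /proc/swaps-style text on stdin.
# The first line is a column header, so it is skipped.
active_swap_count() {
  awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }'
}

# Usage on a node (nothing printed by the first command means all three are enabled):
#   missing_cgroups cpuset memory hugetlb < /proc/cgroups
#   active_swap_count < /proc/swaps
```

A controller that the kernel was built without will show up as missing here, which is worth distinguishing from one that is merely disabled on the kernel command line.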

Raspberry Pi Imager doesn't allow you to specify an SSH key for the root user, so I do this in goldentooth.configure. However, I also have Kubespray installed (for when I want things to Just Work™), and Kubespray expects the remote user to be root. As a result, I specify that the remote user is my normal user account in the configure_cluster playbook. This means a lot of become: true in the roles, but I would prefer eventually to ditch Kubespray and disallow root login via SSH.

Anyway, we need to rerun goldentooth.set_bash_prompt, but as the root user. This almost never matters, since I prefer to SSH as a normal user and use sudo, but I like my prompts and you can't take them away from me.

With the nodes configured, we can start talking about the different roles they will serve.

Cluster Roles and Responsibilities

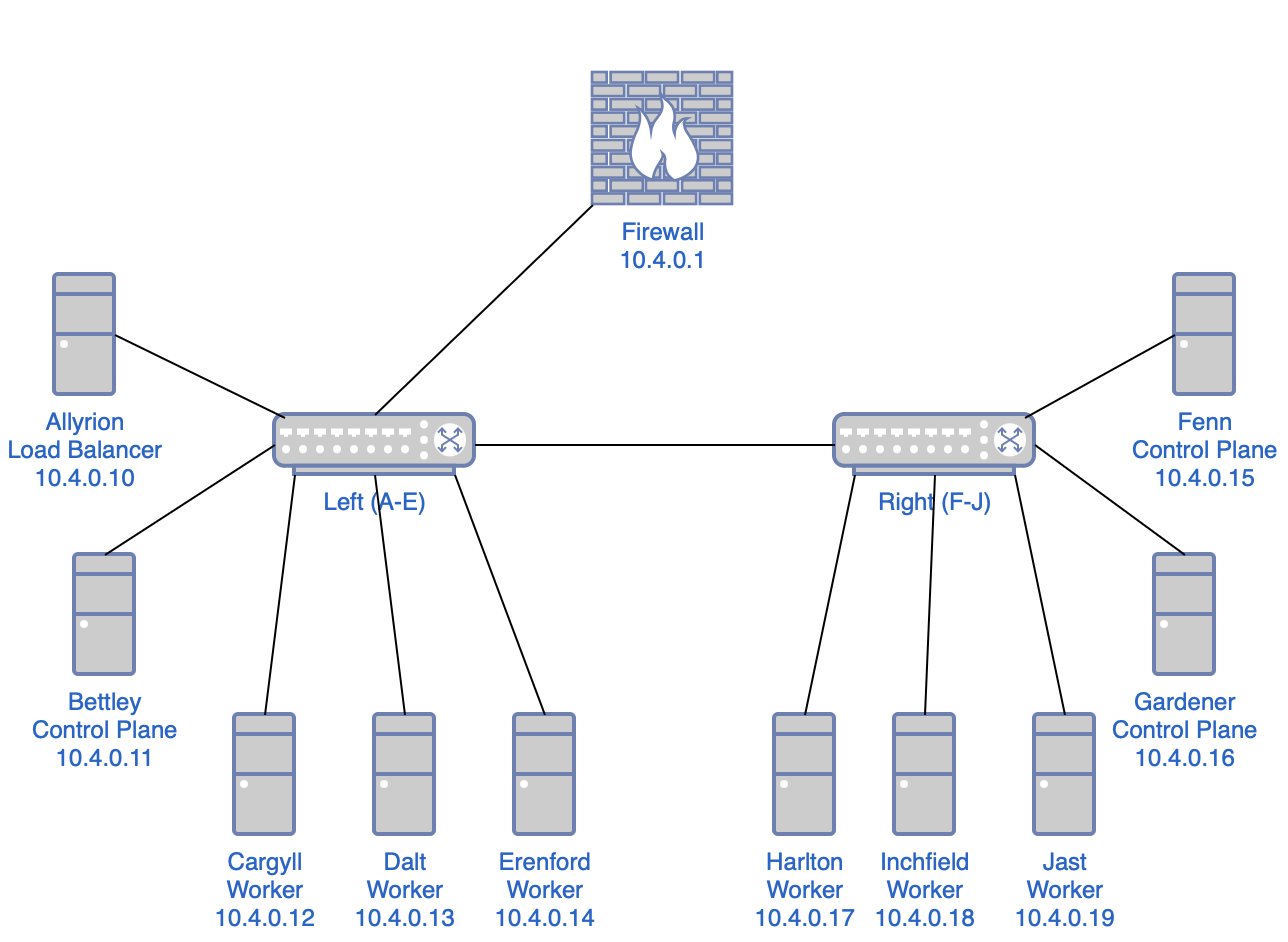

Observations:

- The cluster has a single power supply but two power distribution units (PDUs) and two network switches, so it seems reasonable to segment the cluster into left and right halves.

- I want high availability, which requires a control plane capable of a quorum, so a minimum of three nodes in the control plane.

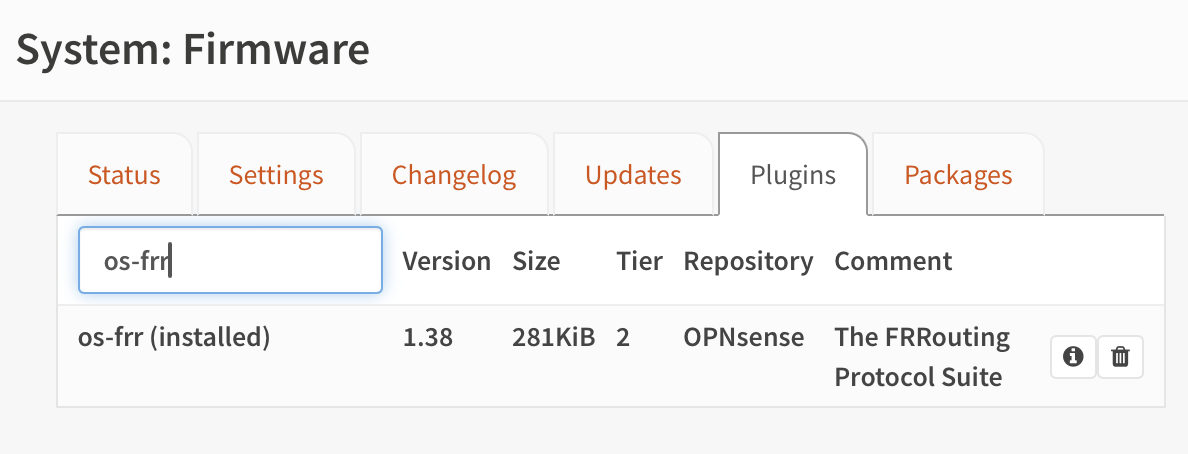

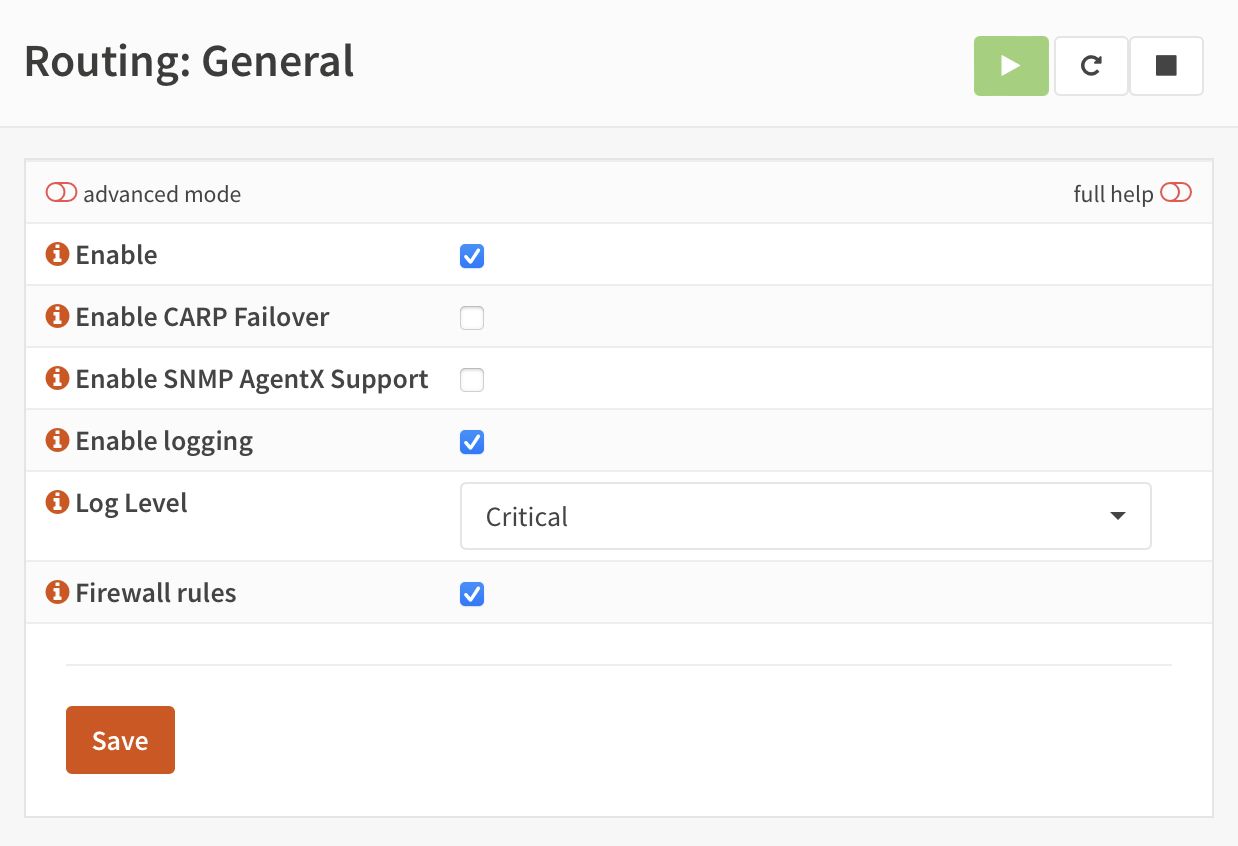

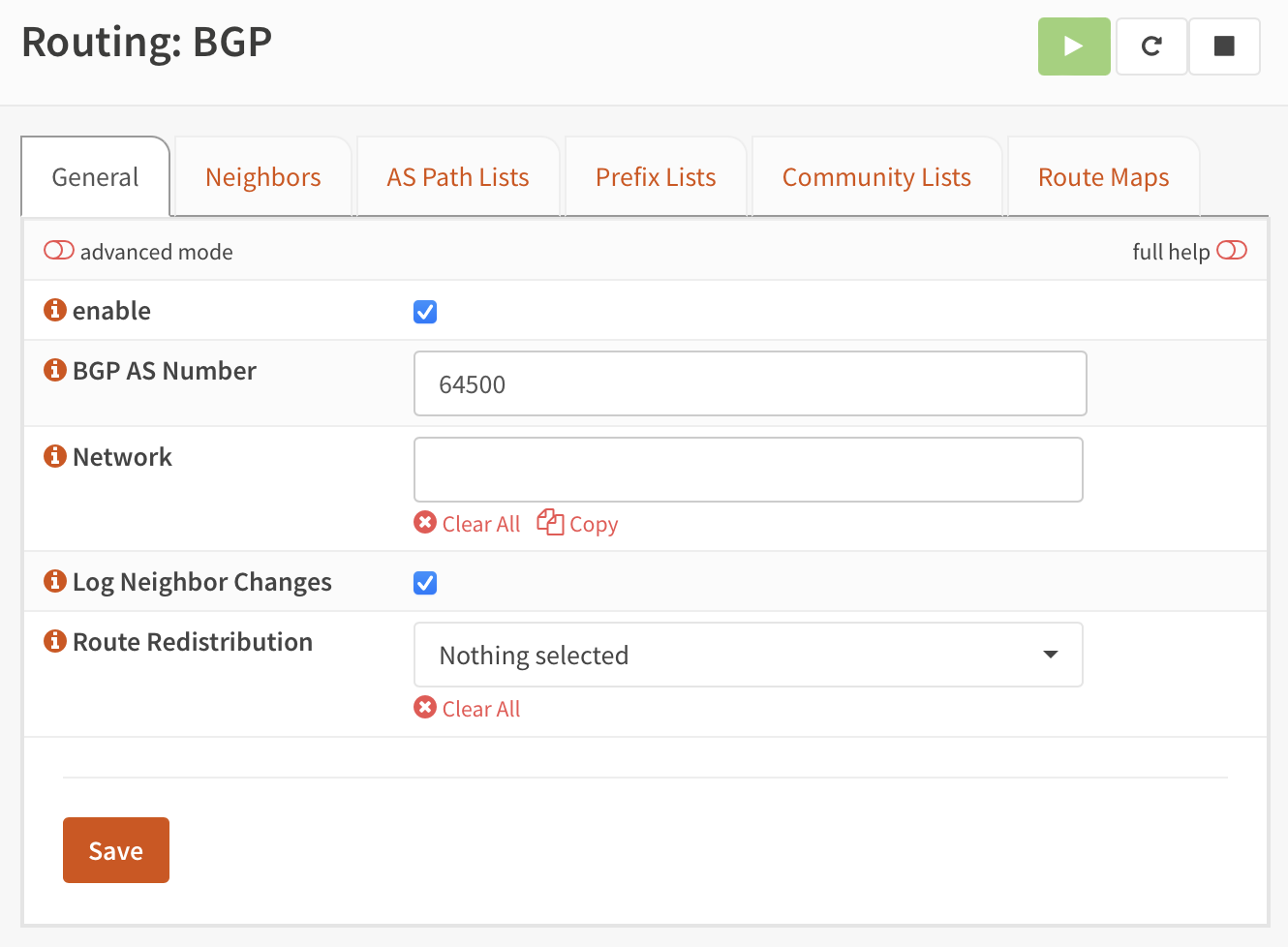

- I want to use a dedicated external load balancer for the control plane rather than configure my existing Opnsense firewall/router. (I'll have to do that to enable MetalLB via BGP, sadly.)

- So that would yield one load balancer, three control plane nodes, and six worker nodes.

- With the left-right segmentation, I can locate one load balancer and one control plane node on the left side, two control plane nodes on the right side, and three worker nodes on each side.

This isn't really high-availability; the cluster has multiple single points of failure:

- the load balancer node

- whichever network switch is connected to the upstream

- the power supply

- the PDU powering the LB

- the PDU powering the upstream switch

- etc.

That said, I find those acceptable given the nature of this project.
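The "minimum of three" in the observations above follows from majority-quorum arithmetic: a group of n members needs floor(n/2) + 1 votes, so it can lose n minus that many members and keep going. A small sketch of the arithmetic (the function names are my own, purely for illustration):

```shell
# Votes needed for a majority among n members: floor(n/2) + 1.
quorum_size() {
  echo $(( $1 / 2 + 1 ))
}

# Members that can fail while a majority remains: n - quorum.
failure_tolerance() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 2 3 4 5; do
  echo "members=$n quorum=$(quorum_size "$n") tolerated_failures=$(failure_tolerance "$n")"
done
```

Two members tolerate no failures at all, and four tolerate no more than three do, which is why odd sizes starting at three are the usual choice.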

Load Balancer

Allyrion, the first node alphabetically and the top node on the left side, will run a load balancer. I had a number of options here, but I ended up going with HAProxy. HAProxy was my introduction to load balancing, reverse proxying, and so forth, and I have kind of a soft spot for it.

I'd also considered Traefik, which I use elsewhere in my homelab, but I believe I'll use it as an ingress controller. Similarly, I think I prefer to use Nginx on a per-application level. I'm pursuing this project first and foremost to learn and to document my learning, and I'd prefer to cover as much ground as possible, and as clearly as possible, and I believe I can do this best if I don't have to worry about having to specify which installation of $proxy I'm referring to at any given time.

So:

- HAProxy: Load balancer

- Traefik: Ingress controller

- Nginx: Miscellaneous

Control Plane

Bettley (the second node on the left side), along with Fenn and Gardener (the first and second nodes on the right side), will be the control plane nodes.

It's common, in small home Kubernetes clusters, to remove the control plane taint (node-role.kubernetes.io/control-plane) to allow miscellaneous pods to be scheduled on the control plane nodes. I won't be doing that here; six worker nodes should be sufficient for my purposes, and I'll try (where possible and practical) to follow best practices. That said, I might find some random fun things to run on my control plane nodes, and I'll adjust their tolerations accordingly.

Workers

The remaining nodes (Cargyll, Dalt, and Erenford on the left, and Harlton, Inchfield, and Jast on the right) are dedicated workers. What sort of workloads will they run?

Well, probably nothing interesting. Not Plex, not torrent clients or *darrs. Mostly logging, metrics, and similar. I'll probably end up gathering a lot of data about data. And that's fine – these Raspberry Pis are running off SD cards; I don't really want them to be doing anything interesting anyway.

Network Topology

In case you don't quite have a picture of the infrastructure so far, it should look like this:

Frequently Asked Questions

Why didn't you make Etcd high-availability?

It seems like I'd need that cluster to have a quorum too, so we're talking about three nodes for the control plane, three nodes for Etcd, one for the load balancer, and, uh, three worker nodes. That's a bit more than I'd like to invest, and I'd like to avoid doubling up anywhere (although I'll probably add additional functionality to the load balancer). I'm interested in the etcd side of things, but not really enough to compromise elsewhere. I could be missing something obvious, though; if so, please let me know.

Why didn't you just do A=load balancer, B-D=control plane, and E-J=workers?

I could've and should've and still might. But because I'm a bit of a fool and wasn't really paying attention, I put A-E on the left and F-J on the right, rather than A,C,E,G,I on the left and B,D,F,H,J on the right, which would've been a bit cleaner. As it is, I need to think a second about which nodes are control nodes, since they aren't in a strict alphabetical order.

I might adjust this in the future; it should be easy to do so, after all. I just don't particularly want to take the cluster apart and rebuild it, especially since the standoffs were kind of messy as a consequence of the heatsinks.

Load Balancer

This cluster should have a high-availability control plane, and we can start laying the groundwork for that immediately.

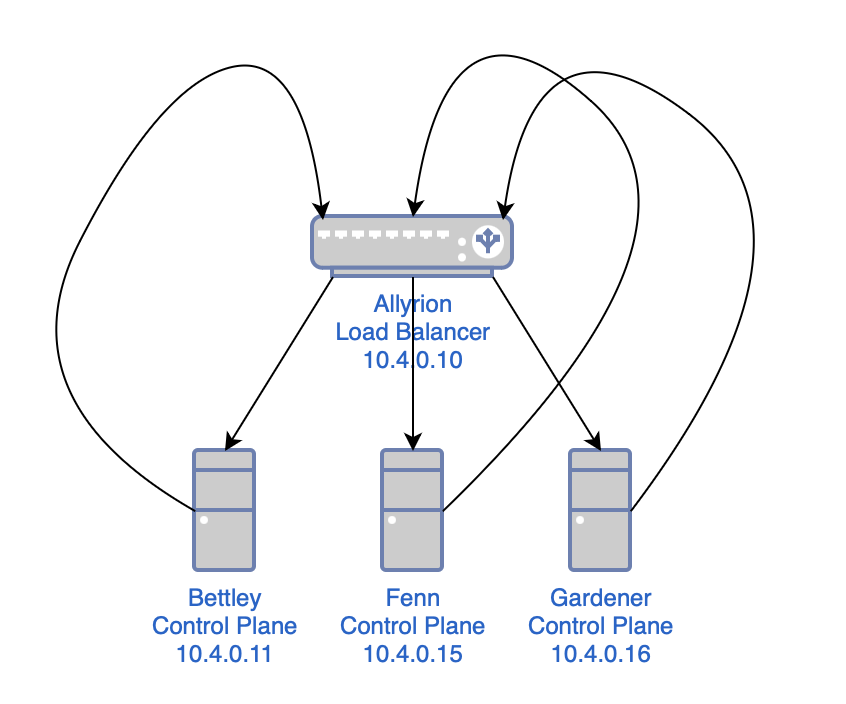

This might sound complex, but all we're doing is:

- creating a load balancer

- configuring the load balancer to use all of the control plane nodes as a list of backends

- telling anything that sends requests to a control plane node to send them to the load balancer instead

As mentioned before, we're using HAProxy as a load balancer. First, though, I'll install rsyslog, a log processing system. It will gather logs from HAProxy and deposit them in a more ergonomic location.

$ sudo apt install -y rsyslog

At least at the time of writing (February 2024), rsyslog on Raspberry Pi OS includes a bit of configuration that relocates HAProxy logs:

# /etc/rsyslog.d/49-haproxy.conf

# Create an additional socket in haproxy's chroot in order to allow logging via
# /dev/log to chroot'ed HAProxy processes
$AddUnixListenSocket /var/lib/haproxy/dev/log

# Send HAProxy messages to a dedicated logfile
:programname, startswith, "haproxy" {
  /var/log/haproxy.log
  stop
}

In Raspberry Pi OS, installing and configuring HAProxy is a simple matter.

$ sudo apt install -y haproxy

Here is the configuration I'm working with for HAProxy at the time of writing (February 2024); I've done my best to comment it thoroughly. You can also see the Jinja2 template and the role that deploys the template to configure HAProxy.

# /etc/haproxy/haproxy.cfg

# This is the HAProxy configuration file for the load balancer in my Kubernetes
# cluster. It is used to load balance the API server traffic between the
# control plane nodes.

# Global parameters
global
  # Sets uid for haproxy process.
  user haproxy
  # Sets gid for haproxy process.
  group haproxy
  # Sets the maximum per-process number of concurrent connections.
  maxconn 4096
  # Configure logging.
  log /dev/log local0
  log /dev/log local1 notice

# Default parameters
defaults
  # Use global log configuration.
  log global

# Frontend configuration for the HAProxy stats page.
frontend stats-frontend
  # Listen on all IPv4 addresses on port 8404.
  bind *:8404
  # Use HTTP mode.
  mode http
  # Enable the stats page.
  stats enable
  # Set the URI to access the stats page.
  stats uri /stats
  # Set the refresh rate of the stats page.
  stats refresh 10s
  # Set the realm to access the stats page.
  stats realm HAProxy\ Statistics
  # Set the username and password to access the stats page.
  stats auth nathan:<redacted>
  # Hide HAProxy version to improve security.
  stats hide-version

# Kubernetes API server frontend configuration.
frontend k8s-api-server
  # Listen on the IPv4 address of the load balancer on port 6443.
  bind 10.4.0.10:6443
  # Use TCP mode, which means that the connection will be passed to the server
  # without TLS termination, etc.
  mode tcp
  # Enable logging of the client's IP address and port.
  option tcplog
  # Use the Kubernetes API server backend.
  default_backend k8s-api-server

# Kubernetes API server backend configuration.
backend k8s-api-server
  # Use TCP mode, not HTTPS.
  mode tcp
  # Sets the maximum time to wait for a connection attempt to a server to
  # succeed.
  timeout connect 10s
  # Sets the maximum inactivity time on the client side. I might reduce this at
  # some point.
  timeout client 86400s
  # Sets the maximum inactivity time on the server side. I might reduce this at
  # some point.
  timeout server 86400s
  # Sets the load balancing algorithm.
  # `roundrobin` means that each server is used in turns, according to their
  # weights.
  balance roundrobin
  # Enable health checks.
  option tcp-check
  # For each control plane node, add a server line with the node's hostname and
  # IP address.
  # The `check` parameter enables health checks.
  # The `fall` parameter sets the number of consecutive health check failures
  # after which the server is considered to be down.
  # The `rise` parameter sets the number of consecutive health check successes
  # after which the server is considered to be up.
  server bettley 10.4.0.11:6443 check fall 3 rise 2
  server fenn 10.4.0.15:6443 check fall 3 rise 2
  server gardener 10.4.0.16:6443 check fall 3 rise 2
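The three server lines at the end of the backend are the only part of this file that depends on which nodes are in the control plane. The real deployment renders them from a Jinja2 template; purely as an illustration of the same idea, here is a shell sketch with a hypothetical function name that turns "name ip" pairs into server lines using the port and check settings shown above.

```shell
# Emit one HAProxy "server" line per "name ip" pair read from stdin.
# Port 6443 and the check/fall/rise values mirror the configuration above.
render_backend_servers() {
  while read -r name ip; do
    [ -n "$name" ] || continue
    printf 'server %s %s:6443 check fall 3 rise 2\n' "$name" "$ip"
  done
}

render_backend_servers <<'EOF'
bettley 10.4.0.11
fenn 10.4.0.15
gardener 10.4.0.16
EOF
```

Whatever generates the file, running haproxy -c -f /etc/haproxy/haproxy.cfg before reloading the service checks it for syntax errors without touching the running process.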

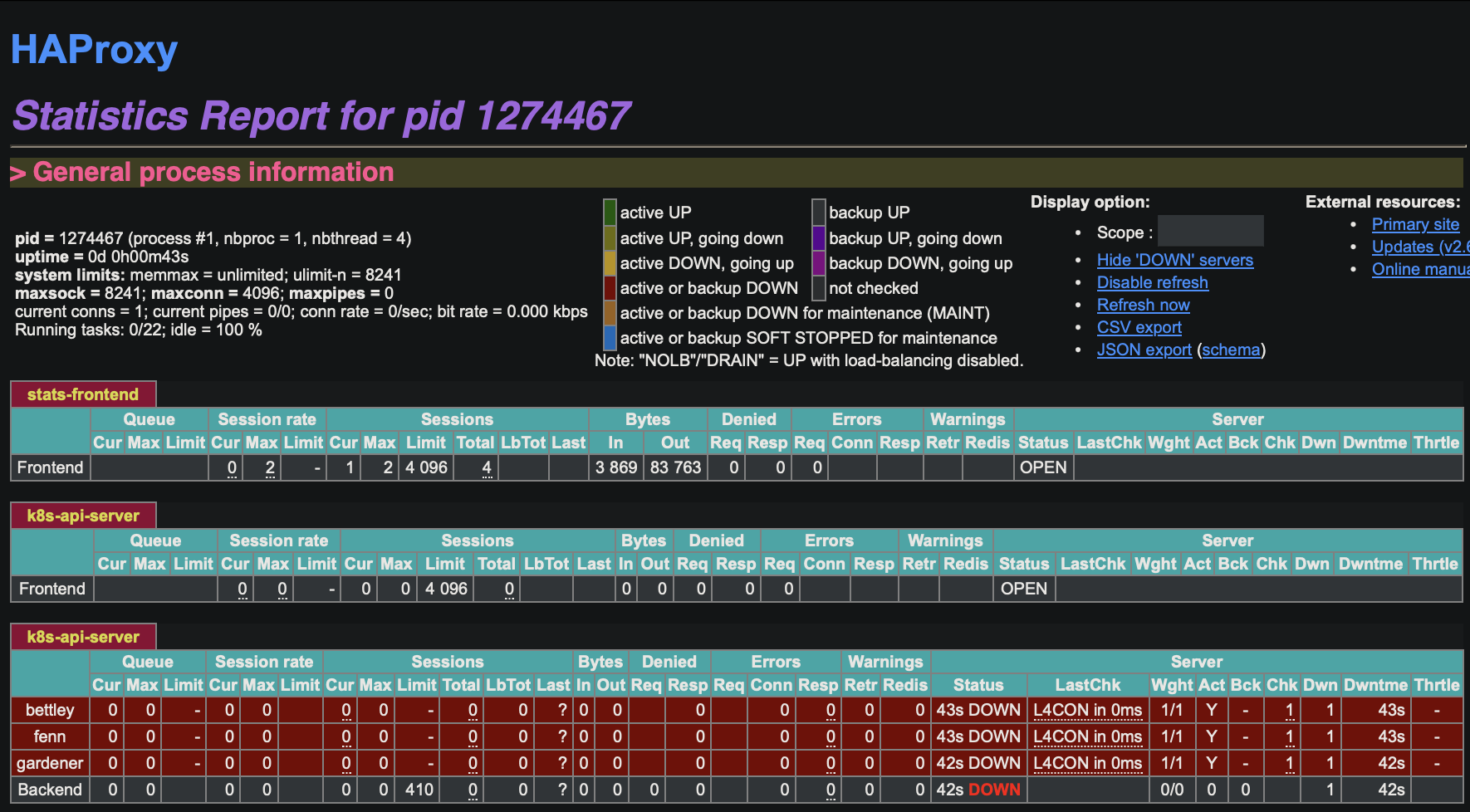

This enables the HAProxy stats frontend, which allows us to gain some insight into the operation of the frontend in something like realtime.

We see that our backends are unavailable, which is of course expected at this time. We can also read the logs, in /var/log/haproxy.log:

$ cat /var/log/haproxy.log

2024-02-21T07:03:16.603651-05:00 allyrion haproxy[1305383]: [NOTICE] (1305383) : haproxy version is 2.6.12-1+deb12u1

2024-02-21T07:03:16.603906-05:00 allyrion haproxy[1305383]: [NOTICE] (1305383) : path to executable is /usr/sbin/haproxy

2024-02-21T07:03:16.604085-05:00 allyrion haproxy[1305383]: [WARNING] (1305383) : Exiting Master process...

2024-02-21T07:03:16.607180-05:00 allyrion haproxy[1305383]: [ALERT] (1305383) : Current worker (1305385) exited with code 143 (Terminated)

2024-02-21T07:03:16.607558-05:00 allyrion haproxy[1305383]: [WARNING] (1305383) : All workers exited. Exiting... (0)

2024-02-21T07:03:16.771133-05:00 allyrion haproxy[1305569]: [NOTICE] (1305569) : New worker (1305572) forked

2024-02-21T07:03:16.772082-05:00 allyrion haproxy[1305569]: [NOTICE] (1305569) : Loading success.

2024-02-21T07:03:16.775819-05:00 allyrion haproxy[1305572]: [WARNING] (1305572) : Server k8s-api-server/bettley is DOWN, reason: Layer4 connection problem, info: "Connection refused at initial connection step of tcp-check", check duration: 0ms. 2 active and 0 backup servers left. 0 sessions active, 0 requeued, 0 remaining in queue.

2024-02-21T07:03:16.776309-05:00 allyrion haproxy[1305572]: Server k8s-api-server/bettley is DOWN, reason: Layer4 connection problem, info: "Connection refused at initial connection step of tcp-check", check duration: 0ms. 2 active and 0 backup servers left. 0 sessions active, 0 requeued, 0 remaining in queue.

2024-02-21T07:03:16.776584-05:00 allyrion haproxy[1305572]: Server k8s-api-server/bettley is DOWN, reason: Layer4 connection problem, info: "Connection refused at initial connection step of tcp-check", check duration: 0ms. 2 active and 0 backup servers left. 0 sessions active, 0 requeued, 0 remaining in queue.

2024-02-21T07:03:17.423831-05:00 allyrion haproxy[1305572]: [WARNING] (1305572) : Server k8s-api-server/fenn is DOWN, reason: Layer4 connection problem, info: "Connection refused at initial connection step of tcp-check", check duration: 0ms. 1 active and 0 backup servers left. 0 sessions active, 0 requeued, 0 remaining in queue.

2024-02-21T07:03:17.424229-05:00 allyrion haproxy[1305572]: Server k8s-api-server/fenn is DOWN, reason: Layer4 connection problem, info: "Connection refused at initial connection step of tcp-check", check duration: 0ms. 1 active and 0 backup servers left. 0 sessions active, 0 requeued, 0 remaining in queue.

2024-02-21T07:03:17.424446-05:00 allyrion haproxy[1305572]: Server k8s-api-server/fenn is DOWN, reason: Layer4 connection problem, info: "Connection refused at initial connection step of tcp-check", check duration: 0ms. 1 active and 0 backup servers left. 0 sessions active, 0 requeued, 0 remaining in queue.

2024-02-21T07:03:17.653803-05:00 allyrion haproxy[1305572]: Connect from 10.0.2.162:53155 to 10.4.0.10:8404 (stats-frontend/HTTP)

2024-02-21T07:03:17.677482-05:00 allyrion haproxy[1305572]: Connect from 10.0.2.162:53156 to 10.4.0.10:8404 (stats-frontend/HTTP)

2024-02-21T07:03:18.114561-05:00 allyrion haproxy[1305572]: [WARNING] (1305572) : Server k8s-api-server/gardener is DOWN, reason: Layer4 connection problem, info: "Connection refused at initial connection step of tcp-check", check duration: 0ms. 0 active and 0 backup servers left. 0 sessions active, 0 requeued, 0 remaining in queue.

2024-02-21T07:03:18.115141-05:00 allyrion haproxy[1305572]: [ALERT] (1305572) : backend 'k8s-api-server' has no server available!

2024-02-21T07:03:18.115560-05:00 allyrion haproxy[1305572]: Server k8s-api-server/gardener is DOWN, reason: Layer4 connection problem, info: "Connection refused at initial connection step of tcp-check", check duration: 0ms. 0 active and 0 backup servers left. 0 sessions active, 0 requeued, 0 remaining in queue.

2024-02-21T07:03:18.116133-05:00 allyrion haproxy[1305572]: Server k8s-api-server/gardener is DOWN, reason: Layer4 connection problem, info: "Connection refused at initial connection step of tcp-check", check duration: 0ms. 0 active and 0 backup servers left. 0 sessions active, 0 requeued, 0 remaining in queue.

2024-02-21T07:03:18.117560-05:00 allyrion haproxy[1305572]: backend k8s-api-server has no server available!

2024-02-21T07:03:18.118458-05:00 allyrion haproxy[1305572]: backend k8s-api-server has no server available!

This is fine and dandy, and will be addressed in future chapters.
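Because each state change is logged several times, the raw log is noisier than the situation it describes. A small sketch for boiling it down to the distinct servers reported as DOWN (the function name is hypothetical, and it assumes the message wording shown in the log above):

```shell
# List the distinct backend servers that HAProxy has reported as DOWN.
# Reads haproxy.log-style lines on stdin and prints "backend/server" names.
down_servers() {
  sed -n 's/.*Server \([^ ]*\) is DOWN.*/\1/p' | sort -u
}

# Usage on the load balancer:
#   down_servers < /var/log/haproxy.log
```

Against the log above, this should reduce the output to the three k8s-api-server entries for bettley, fenn, and gardener.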

Container Runtime

Kubernetes is a container orchestration platform and therefore requires some container runtime to be installed.

This is a simple step; containerd is well-supported, well-regarded, and I don't have any reason not to use it.

I used Jeff Geerling's Ansible role to install and configure containerd on my cluster; this is really the point at which some kind of IaC/configuration management system becomes something more than a polite suggestion 🙂

That said, the actual steps are not very demanding (aside from the fact that they will need to be executed once on each Kubernetes host). They intersect largely with Docker Engine's installation instructions (since Docker, not the Containerd project, maintains the package repository), which I won't repeat here.
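One thing worth confirming before the runtime goes on is that the overlay and br_netfilter modules and the three sysctl parameters from the node configuration chapter are actually in effect. This is a sketch under a couple of assumptions: the function names are made up, the first reads /proc/modules-style input (module name in the first column), and the second reads the "key = value" lines that the sysctl command prints.

```shell
# Print each wanted kernel module (given as arguments) that is absent from
# /proc/modules-style input on stdin.
# Caveat: modules compiled into the kernel never appear in /proc/modules.
missing_modules() {
  loaded="$(awk '{ print $1 }')"
  for mod in "$@"; do
    printf '%s\n' "$loaded" | grep -qxF "$mod" || echo "$mod"
  done
}

# Print the key of every "key = value" line on stdin whose value is not 1.
sysctls_not_enabled() {
  awk -F ' *= *' '$2 != 1 { print $1 }'
}

# Usage on a node (no output from either command means everything is in place):
#   missing_modules overlay br_netfilter < /proc/modules
#   sysctl net.bridge.bridge-nf-call-iptables \
#          net.bridge.bridge-nf-call-ip6tables \
#          net.ipv4.ip_forward | sysctls_not_enabled
```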

The container runtime installation is handled in my install_k8s_packages.yaml playbook, which is where we'll be spending some time in subsequent sections.

Networking

Kubernetes uses three different networks:

- Infrastructure: The physical or virtual backbone connecting the machines hosting the nodes. The infrastructure network enables connectivity between the nodes; this is essential for the Kubernetes control plane components (like the kube-apiserver, etcd, scheduler, and controller-manager) and the worker nodes to communicate with each other. Although pods communicate with each other via the pod network (overlay network), the underlying infrastructure network supports this by facilitating the physical or virtual network paths between nodes.

- Service: This is a purely virtual and internal network. It allows services to communicate with each other and with Pods seamlessly. This network layer abstracts the actual network details from the services, providing a consistent and simplified interface for inter-service communication. When a Service is created, it is automatically assigned a unique IP address from the service network's address space. This IP address is stable for the lifetime of the Service, even if the Pods that make up the Service change. This stable IP address makes it easier to configure DNS or other service discovery mechanisms.

- Pod: This is a crucial component that allows for seamless communication between pods across the cluster, regardless of which node they are running on. This networking model is designed to ensure that each pod gets its own unique IP address, making it appear as though each pod is on a flat network where every pod can communicate with every other pod directly without NAT.

My infrastructure network is already up and running at 10.4.0.0/20. I'll configure my service network at 172.16.0.0/20 and my pod network at 192.168.0.0/16.
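Before committing to those ranges, it's worth a quick sanity check that they don't overlap and that they're big enough. Here's a minimal sketch using Python's standard ipaddress module; Python isn't otherwise part of this project, it's just a convenient calculator, and the helper names are made up for illustration.

```python
import ipaddress

# CIDRs as stated in the text above.
NETWORKS = {
    "infrastructure": ipaddress.ip_network("10.4.0.0/20"),
    "service": ipaddress.ip_network("172.16.0.0/20"),
    "pod": ipaddress.ip_network("192.168.0.0/16"),
}


def find_overlaps(networks):
    """Return every pair of network names whose address ranges overlap."""
    names = sorted(networks)
    return [
        (a, b)
        for i, a in enumerate(names)
        for b in names[i + 1:]
        if networks[a].overlaps(networks[b])
    ]


def usable_hosts(network):
    """Count addresses, excluding the network and broadcast addresses."""
    return network.num_addresses - 2


if __name__ == "__main__":
    for name, network in NETWORKS.items():
        print(f"{name:>14}: {network} ({usable_hosts(network)} usable addresses)")
    print("overlaps:", find_overlaps(NETWORKS))
```

The three ranges are disjoint, which is the property that matters: overlapping pod, service, and infrastructure ranges make routing ambiguous.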

With this decided, we can move forward.

Configuring Packages

Rather than YOLOing binaries onto our nodes like heathens, we'll use Apt and Ansible.

I wrote the above line before a few hours or so of fighting with Apt, Ansible, the repository signing key, documentation on the greater internet, my emotions, etc.

The long and short of it is that apt-key add is deprecated in Debian and Ubuntu, and consequently ansible.builtin.apt_key should be deprecated, but cannot be at this time for backward compatibility with older versions of Debian and Ubuntu and other derivative distributions.

The reason for this deprecation, as I understand it, is that apt-key add adds the key to Apt's global keyring (/etc/apt/trusted.gpg; keys dropped into /etc/apt/trusted.gpg.d behave the same way). Apt trusts keys there for every configured repository, so a third-party key could vouch for packages that claim to come from an official distro package repository. This weakens our defenses against supply-chain attacks.

The new recommendation is to store the key in /etc/apt/keyrings and reference it from the repository's Signed-By field, so that it is trusted only for that one repository and not for the official distro package repositories.

A further complication is that the Kubernetes project has moved its package repositories a time or two and completely rewritten the repository structure.

As a result, if you Google™, you will find a number of ways of using Ansible or a shell command to configure the Kubernetes apt repository on Debian/Ubuntu/Raspberry Pi OS, but none of them are optimal.

The Desired End-State

Here are my expectations:

- use the new deb822 format, not the old sources.list format

- preserve idempotence

- don't point to deprecated package repositories

- actually work

Existing solutions failed at one or more of these.

For the record, what we're trying to create is:

- a file located at /etc/apt/keyrings/kubernetes.asc containing the Kubernetes package repository signing key

- a file located at /etc/apt/sources.list.d/kubernetes.sources containing information about the Kubernetes package repository.

The latter should look something like the following:

X-Repolib-Name: kubernetes

Types: deb

URIs: https://pkgs.k8s.io/core:/stable:/v1.29/deb/

Suites: /

Architectures: arm64

Signed-By: /etc/apt/keyrings/kubernetes.asc
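One thing the stanza makes visible is that the minor version is baked into the repository URI, so moving to a new minor release means pointing at a different repository. A small sketch of that relationship follows; the function name and its parameters are hypothetical, and the real file is written by Ansible, not by this code.

```python
def kubernetes_sources_stanza(minor_version, architecture="arm64",
                              keyring="/etc/apt/keyrings/kubernetes.asc"):
    """Render a deb822 stanza for the pkgs.k8s.io repository of one minor release."""
    fields = [
        ("X-Repolib-Name", "kubernetes"),
        ("Types", "deb"),
        # The minor version (e.g. "1.29") selects the repository.
        ("URIs", f"https://pkgs.k8s.io/core:/stable:/v{minor_version}/deb/"),
        ("Suites", "/"),
        ("Architectures", architecture),
        ("Signed-By", keyring),
    ]
    return "\n".join(f"{key}: {value}" for key, value in fields) + "\n"


if __name__ == "__main__":
    print(kubernetes_sources_stanza("1.29"), end="")
```

Calling it with "1.29" reproduces the six fields shown above, in the same order.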

The Solution

After quite some time and effort and suffering, I arrived at a solution.

You can review the original task file for changes, but I'm embedding it here because it was weirdly a nightmare to arrive at a working solution.

I've edited this only to substitute strings for the variables that point to them, so it should be a working solution more-or-less out-of-the-box.

---

- name: 'Install packages needed to use the Kubernetes Apt repository.'

  ansible.builtin.apt:

    name:

      - 'apt-transport-https'

      - 'ca-certificates'

      - 'curl'

      - 'gnupg'

      - 'python3-debian'

    state: 'present'

- name: 'Add Kubernetes repository.'

  ansible.builtin.deb822_repository:

    name: 'kubernetes'

    types:

      - 'deb'

    uris:

      - "https://pkgs.k8s.io/core:/stable:/v1.29/deb/"

    suites:

      - '/'

    architectures:

      - 'arm64'

    signed_by: "https://pkgs.k8s.io/core:/stable:/v1.29/deb/Release.key"

After this, you will of course need to update your Apt cache and install the three Kubernetes tools we'll use shortly: kubeadm, kubectl, and kubelet.

Installing Packages

Now that we have functional access to the Kubernetes Apt package repository, we can install some important Kubernetes tools:

- kubeadm provides a straightforward way to set up and configure a Kubernetes cluster (API server, Controller Manager, DNS, etc). Kubernetes the Hard Way basically does what kubeadm does. I use kubeadm because my goal is to go not necessarily deeper, but farther.

- kubectl is a CLI tool for administering a Kubernetes cluster; you can deploy applications, inspect resources, view logs, etc. As I'm studying for my CKA, I want to use kubectl for as much as possible.

- kubelet runs on each and every node in the cluster, ensures that pods are functioning as desired, and takes steps to correct their behavior when it does not match the desired state.

Installing these tools is comparatively simple, just sudo apt-get install -y kubeadm kubectl kubelet, or as covered in the relevant role.

kubeadm init

kubeadm does a wonderful job of simplifying Kubernetes cluster bootstrapping (if you don't believe me, just read Kubernetes the Hard Way), but there's still a decent amount of work involved. Since we're creating a high-availability cluster, we need to do some magic to convey secrets between the control plane nodes, generate join tokens for the worker nodes, etc.

So, we will:

- run kubeadm on the first control plane node

- copy some data around

- run a different kubeadm command to join the rest of the control plane nodes to the cluster

- copy some more data around

- run a different kubeadm command to join the worker nodes to the cluster

and then we're done!

kubeadm init takes a number of command-line arguments.

You can look at the actual Ansible tasks bootstrapping my cluster, but this is what my command evaluates out to:

kubeadm init \

--control-plane-endpoint="10.4.0.10:6443" \

--kubernetes-version="stable-1.29" \

--service-cidr="172.16.0.0/20" \

--pod-network-cidr="192.168.0.0/16" \

--cert-dir="/etc/kubernetes/pki" \

--cri-socket="unix:///var/run/containerd/containerd.sock" \

--upload-certs

I'll break that down line by line:

# Run through all of the phases of initializing a Kubernetes control plane.

kubeadm init \

# Requests should target the load balancer, not this particular node.

--control-plane-endpoint="10.4.0.10:6443" \

# We don't need any more instability than we already have.

# At time of writing, 1.29 is the current release.

--kubernetes-version="stable-1.29" \

# As described in the chapter on Networking, this is the CIDR from which

# service IP addresses will be allocated.

# This gives us 4,094 IP addresses to work with.

--service-cidr="172.16.0.0/20" \

# As described in the chapter on Networking, this is the CIDR from which

# pod IP addresses will be allocated.

# This gives us 65,534 IP addresses to work with.

--pod-network-cidr="192.168.0.0/16" \

# This is the directory that will host TLS certificates, keys, etc for

# cluster communication.

--cert-dir="/etc/kubernetes/pki" \

# This is the URI of the container runtime interface socket, which allows

# direct interaction with the container runtime.

--cri-socket="unix:///var/run/containerd/containerd.sock" \

# Upload certificates into the appropriate secrets, rather than making us

# do that manually.

--upload-certs

Oh, you thought I was just going to blow right by this, didncha? No, this ain't Kubernetes the Hard Way, but I do want to make an effort to understand what's going on here. So here, courtesy of kubeadm init --help, is the list of phases that kubeadm runs through by default.

preflight Run pre-flight checks

certs Certificate generation

/ca Generate the self-signed Kubernetes CA to provision identities for other Kubernetes components

/apiserver Generate the certificate for serving the Kubernetes API

/apiserver-kubelet-client Generate the certificate for the API server to connect to kubelet

/front-proxy-ca Generate the self-signed CA to provision identities for front proxy

/front-proxy-client Generate the certificate for the front proxy client

/etcd-ca Generate the self-signed CA to provision identities for etcd

/etcd-server Generate the certificate for serving etcd

/etcd-peer Generate the certificate for etcd nodes to communicate with each other

/etcd-healthcheck-client Generate the certificate for liveness probes to healthcheck etcd

/apiserver-etcd-client Generate the certificate the apiserver uses to access etcd

/sa Generate a private key for signing service account tokens along with its public key

kubeconfig Generate all kubeconfig files necessary to establish the control plane and the admin kubeconfig file

/admin Generate a kubeconfig file for the admin to use and for kubeadm itself

/super-admin Generate a kubeconfig file for the super-admin

/kubelet Generate a kubeconfig file for the kubelet to use *only* for cluster bootstrapping purposes

/controller-manager Generate a kubeconfig file for the controller manager to use

/scheduler Generate a kubeconfig file for the scheduler to use

etcd Generate static Pod manifest file for local etcd

/local Generate the static Pod manifest file for a local, single-node local etcd instance

control-plane Generate all static Pod manifest files necessary to establish the control plane

/apiserver Generates the kube-apiserver static Pod manifest

/controller-manager Generates the kube-controller-manager static Pod manifest

/scheduler Generates the kube-scheduler static Pod manifest

kubelet-start Write kubelet settings and (re)start the kubelet

upload-config Upload the kubeadm and kubelet configuration to a ConfigMap

/kubeadm Upload the kubeadm ClusterConfiguration to a ConfigMap

/kubelet Upload the kubelet component config to a ConfigMap

upload-certs Upload certificates to kubeadm-certs

mark-control-plane Mark a node as a control-plane

bootstrap-token Generates bootstrap tokens used to join a node to a cluster

kubelet-finalize Updates settings relevant to the kubelet after TLS bootstrap

/experimental-cert-rotation Enable kubelet client certificate rotation

addon Install required addons for passing conformance tests

/coredns Install the CoreDNS addon to a Kubernetes cluster

/kube-proxy Install the kube-proxy addon to a Kubernetes cluster

show-join-command Show the join command for control-plane and worker node

So now I will go through each of these in turn to explain how the cluster is created.

kubeadm init phases

preflight

The preflight phase performs a number of checks of the environment to ensure it is suitable. These aren't, as far as I can tell, documented anywhere -- perhaps because documentation would inevitably drift out of sync with the code rather quickly. And, besides, we're engineers and this is an open-source project; if we care that much, we can just read the source code!

But I'll go through and mention a few of these checks, just for the sake of discussion and because there are some important concepts.

- Networking: It checks that certain ports are available and firewall settings do not prevent communication.

- Container Runtime: It requires a container runtime, since... Kubernetes is a container orchestration platform.

- Swap: Historically, Kubernetes has balked at running on a system with swap enabled, for performance and stability reasons, but this restriction has been relaxed in recent releases.

- Uniqueness: It checks that each hostname is different in order to prevent networking conflicts.

- Kernel Parameters: It checks for certain cgroups (see the Node configuration chapter for more information). It used to check for some networking parameters as well, to ensure traffic can flow properly, but it appears this might not be a thing anymore in 1.30.

certs

This phase generates important certificates for communication between cluster components.

/ca

This generates a self-signed certificate authority that will be used to provision identities for all of the other Kubernetes components, and lays the groundwork for the security and reliability of their communication by ensuring that all components are able to trust one another.

By generating its own root CA, a Kubernetes cluster can be self-sufficient in managing the lifecycle of the certificates it uses for TLS. This includes generating, distributing, rotating, and revoking certificates as needed. This autonomy simplifies the setup and ongoing management of the cluster, especially in environments where integrating with an external CA might be challenging.

It's worth mentioning that this includes client certificates as well as server certificates, since client certificates aren't currently as well-known and ubiquitous as server certificates. So just as the API server has a server certificate that allows clients making requests to verify its identity, so clients will have a client certificate that allows the server to verify their identity.

So these certificate relationships provide confidentiality, integrity, and authentication (a variation on the classic CIA triad, in which the A stands for availability) by:

- encrypting the data transmitted between the client and the server (Confidentiality)

- preventing tampering with the data transmitted between the client and the server (Integrity)

- verifying the identity of the server and the client (Authentication)
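To make the "both sides present a certificate" idea concrete, here's a minimal sketch of mutual TLS using Python's standard ssl module. It isn't how Kubernetes implements any of this (the components are written in Go); the function names are made up, and the file paths are parameters you'd have to supply yourself.

```python
import ssl


def build_server_context(ca_file=None, cert_file=None, key_file=None):
    """TLS context for a server that insists on a client certificate."""
    context = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    # Reject any client that does not present a certificate we can verify.
    context.verify_mode = ssl.CERT_REQUIRED
    if ca_file:
        # The CA that signed the client certificates.
        context.load_verify_locations(cafile=ca_file)
    if cert_file and key_file:
        # The server's own certificate and private key.
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return context


def build_client_context(ca_file=None, cert_file=None, key_file=None):
    """TLS context for a client that verifies the server and identifies itself."""
    # Server verification and hostname checking are on by default here.
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    if cert_file and key_file:
        # The client certificate presented to the server.
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return context
```

The only difference from everyday HTTPS is the CERT_REQUIRED line on the server side; an ordinary web server never asks the client to prove who it is.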

/apiserver

The Kubernetes API server is the central management entity of the cluster. The Kubernetes API allows users and internal and external processes and components to communicate and report and manage the state of the cluster. The API server accepts, validates, and executes REST operations, and is the only cluster component that interacts with etcd directly. etcd is the source of truth within the cluster, so it is essential that communication with the API server be secure. This phase generates the serving certificate that the API server presents to its clients so that they can verify its identity.

/apiserver-kubelet-client

This is a client certificate for the API server, ensuring that it can authenticate itself to each kubelet and prove that it is a legitimate source of commands and requests.

/front-proxy-ca and /front-proxy-client

The front proxy certificates are only used by the API aggregation layer: when the API server proxies a request to an extension API server, it presents the front-proxy-client certificate, which is signed by the front-proxy CA. kube-proxy is not involved, despite the similar name. This is beyond the scope of this project.

/etcd-ca

etcd can be configured to run "stacked" (deployed onto the control plane) or as an external cluster. For various reasons (security via isolation, access control, simplified rotation and management, etc), etcd is provided its own certificate authority.

/etcd-server

This is a server certificate for each etcd node, assuring the Kubernetes API server and etcd peers of its identity.

/etcd-peer

This is a client and server certificate, distributed to each etcd node, that enables them to communicate securely with one another.

/etcd-healthcheck-client

This is a client certificate that enables the caller to probe etcd. It permits broader access, in that multiple clients can use it, but the degree of that access is very restricted.

/apiserver-etcd-client

This is a client certificate permitting the API server to communicate with etcd.

/sa

This is a public and private key pair that is used for signing service account tokens.

Service accounts are used to provide an identity for processes that run in a Pod, permitting them to interact securely with the API server.

Service account tokens are JWTs (JSON Web Tokens). When a Pod accesses the Kubernetes API, it can present a service account token as a bearer token in the HTTP Authorization header. The API server then uses the public key to verify the signature on the token, and can then evaluate whether the claims are valid, etc.
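A JWT is just three base64url-encoded segments (header, claims, signature) joined by dots, so you can peek inside one with nothing but the standard library. The sketch below builds a made-up token and decodes it again; it deliberately does not verify the signature, which is the part the API server does with the public key. The claim values are illustrative, not copied from my cluster.

```python
import base64
import json


def b64url_decode(segment):
    """Decode an unpadded base64url segment as used in JWTs."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def b64url_encode(raw):
    """Encode bytes as unpadded base64url."""
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def decode_unverified(token):
    """Split a JWT into header and claims WITHOUT checking the signature."""
    header_segment, claims_segment, _signature = token.split(".")
    header = json.loads(b64url_decode(header_segment))
    claims = json.loads(b64url_decode(claims_segment))
    return header, claims


def make_demo_token():
    """Build a made-up token; the signature is a placeholder, not a real one."""
    header = {"alg": "RS256", "typ": "JWT"}
    claims = {
        "iss": "https://kubernetes.default.svc.cluster.local",
        "sub": "system:serviceaccount:default:demo",
    }
    segments = [b64url_encode(json.dumps(part).encode()) for part in (header, claims)]
    return ".".join(segments + [b64url_encode(b"not-a-real-signature")])


if __name__ == "__main__":
    print(decode_unverified(make_demo_token()))
```

Because the claims are only encoded, not encrypted, anyone holding a token can read them; the signature is what stops them from being altered.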

kubeconfig

These phases write the necessary configuration files to secure and facilitate communication within the cluster and between administrator tools (like kubectl) and the cluster.

/admin

This is the kubeconfig file for the cluster administrator. It provides the admin user with full access to the cluster.

Now, per a change in 1.29, as Rory McCune explains, this admin credential is no longer a member of system:masters and instead has access granted via RBAC. This means that access can be revoked without having to manually rotate all of the cluster certificates.

/super-admin

This new credential also provides full access to the cluster, but via the system:masters group mechanism (read: irrevocable without rotating certificates). This also explains why, when watching my cluster spin up while using the admin.conf credentials, a time or two I saw access denied errors!

/kubelet

This credential is for use with the kubelet during cluster bootstrapping. It provides a baseline cluster-wide configuration for all kubelets in the cluster. It points to the client certificates that allow the kubelet to communicate with the API server so we can propagate cluster-level configuration to each kubelet.

/controller-manager

This credential is used by the Controller Manager. The Controller Manager is responsible for running controller processes, which watch the state of the cluster through the API server and make changes attempting to move the current state towards the desired state. This file contains credentials that allow the Controller Manager to communicate securely with the API server.

/scheduler

This credential is used by the Kubernetes Scheduler. The Scheduler is responsible for assigning work, in the form of Pods, to different nodes in the cluster. It makes these decisions based on resource availability, workload requirements, and other policies. This file contains the credentials needed for the Scheduler to interact with the API server.

etcd

This phase generates the static pod manifest file for local etcd.

Static pod manifests are files kept in (in our case) /etc/kubernetes/manifests; the kubelet observes this directory and will start/replace/delete pods accordingly. In the case of a "stacked" cluster, where we have critical control plane components like etcd and the API server running within pods, we need some method of creating and managing pods without those components. Static pod manifests provide this capability.

/local

This phase configures a local etcd instance to run on the same node as the other control plane components. This is what we'll be doing; later, when we join additional nodes to the control plane, the etcd cluster will expand.

For instance, the static pod manifest file for etcd on bettley, my first control plane node, has a spec.containers[0].command that looks like this:

....

- command:

- etcd

- --advertise-client-urls=https://10.4.0.11:2379

- --cert-file=/etc/kubernetes/pki/etcd/server.crt

- --client-cert-auth=true

- --data-dir=/var/lib/etcd

- --experimental-initial-corrupt-check=true

- --experimental-watch-progress-notify-interval=5s

- --initial-advertise-peer-urls=https://10.4.0.11:2380

- --initial-cluster=bettley=https://10.4.0.11:2380

- --key-file=/etc/kubernetes/pki/etcd/server.key

- --listen-client-urls=https://127.0.0.1:2379,https://10.4.0.11:2379

- --listen-metrics-urls=http://127.0.0.1:2381

- --listen-peer-urls=https://10.4.0.11:2380

- --name=bettley

- --peer-cert-file=/etc/kubernetes/pki/etcd/peer.crt

- --peer-client-cert-auth=true

- --peer-key-file=/etc/kubernetes/pki/etcd/peer.key

- --peer-trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt

- --snapshot-count=10000

- --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt

....

whereas on fenn, the second control plane node, the corresponding static pod manifest file looks like this:

- command:

- etcd

- --advertise-client-urls=https://10.4.0.15:2379

- --cert-file=/etc/kubernetes/pki/etcd/server.crt

- --client-cert-auth=true

- --data-dir=/var/lib/etcd

- --experimental-initial-corrupt-check=true

- --experimental-watch-progress-notify-interval=5s

- --initial-advertise-peer-urls=https://10.4.0.15:2380

- --initial-cluster=fenn=https://10.4.0.15:2380,gardener=https://10.4.0.16:2380,bettley=https://10.4.0.11:2380

- --initial-cluster-state=existing

- --key-file=/etc/kubernetes/pki/etcd/server.key

- --listen-client-urls=https://127.0.0.1:2379,https://10.4.0.15:2379

- --listen-metrics-urls=http://127.0.0.1:2381

- --listen-peer-urls=https://10.4.0.15:2380

- --name=fenn

- --peer-cert-file=/etc/kubernetes/pki/etcd/peer.crt

- --peer-client-cert-auth=true

- --peer-key-file=/etc/kubernetes/pki/etcd/peer.key

- --peer-trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt

- --snapshot-count=10000

- --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt

and correspondingly, we can see three pods:

$ kubectl -n kube-system get pods

NAME READY STATUS RESTARTS AGE

etcd-bettley 1/1 Running 19 3h23m

etcd-fenn 1/1 Running 0 3h22m

etcd-gardener 1/1 Running 0 3h23m
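The --initial-cluster flag is the interesting difference between the two manifests, and the member count it implies determines how many failures etcd can survive. A small sketch, with hypothetical helper names, that parses the flag value and works out the majority etcd needs:

```python
def parse_initial_cluster(value):
    """Turn 'name=url,name=url' into a {name: peer_url} dictionary."""
    members = {}
    for entry in value.split(","):
        name, _, peer_url = entry.partition("=")
        members[name] = peer_url
    return members


def quorum(member_count):
    """Smallest majority of a cluster with member_count voting members."""
    return member_count // 2 + 1


def failures_tolerated(member_count):
    """How many members can be lost while a majority remains."""
    return member_count - quorum(member_count)


if __name__ == "__main__":
    fenn = ("fenn=https://10.4.0.15:2380,"
            "gardener=https://10.4.0.16:2380,"
            "bettley=https://10.4.0.11:2380")
    members = parse_initial_cluster(fenn)
    print(sorted(members), quorum(len(members)), failures_tolerated(len(members)))
```

With the three members listed on fenn, a majority is two, so the cluster keeps working with one control plane node down; the single-member cluster that bettley started with could not tolerate any failure.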

control-plane

This phase generates the static pod manifest files for the other (non-etcd) control plane components.

/apiserver

This generates the static pod manifest file for the API server, which we've already discussed quite a bit.

/controller-manager

This generates the static pod manifest file for the controller manager. The controller manager embeds the core control loops shipped with Kubernetes. A controller is a loop that watches the shared state of the cluster through the API server and makes changes attempting to move the current state towards the desired state. Examples of controllers that are part of the Controller Manager include the Replication Controller, Endpoints Controller, Namespace Controller, and ServiceAccounts Controller.
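The control loop idea is simple enough to sketch in a few lines. This toy version compares a desired state with an actual state and returns the corrective actions; the workload names and the action tuples are invented for illustration, and real controllers work against the API server rather than plain dictionaries.

```python
def reconcile_once(desired, actual):
    """One pass of a control loop: compare states and return corrective actions.

    Both arguments map a (hypothetical) workload name to a replica count.
    """
    actions = []
    for name, want in sorted(desired.items()):
        have = actual.get(name, 0)
        if have < want:
            actions.append(("scale-up", name, want - have))
        elif have > want:
            actions.append(("scale-down", name, have - want))
    # Anything running that is no longer desired gets removed.
    for name in sorted(set(actual) - set(desired)):
        actions.append(("delete", name, actual[name]))
    return actions


def apply(actions, actual):
    """Apply the actions to a copy of the actual state and return it."""
    state = dict(actual)
    for verb, name, count in actions:
        if verb == "scale-up":
            state[name] = state.get(name, 0) + count
        elif verb == "scale-down":
            state[name] -= count
        else:  # delete
            del state[name]
    return state
```

The useful property is that the loop is idempotent: once actual matches desired, another pass produces no actions at all.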

/scheduler

This phase generates the static pod manifest file for the scheduler. The scheduler is responsible for allocating pods to nodes in the cluster based on various scheduling principles, including resource availability, constraints, affinities, etc.
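Scheduling boils down to two steps: filter out the nodes that can't run the pod, then score the ones that can. Here's a deliberately crude sketch of that shape; the node names, the resource fields, and the scoring rule (most headroom wins, adding CPU and memory together) are all assumptions made for the example and are far simpler than what the real scheduler does.

```python
def schedule(pod, nodes):
    """Pick a node for the pod: filter out unsuitable nodes, then score the rest.

    pod:   {"cpu": millicores, "memory": MiB} requested
    nodes: {name: {"cpu": free millicores, "memory": free MiB, "schedulable": bool}}
    Returns the chosen node name, or None if nothing fits.
    """
    # Filter: keep only nodes that accept workloads and have room for the pod.
    feasible = {
        name: node for name, node in nodes.items()
        if node["schedulable"]
        and node["cpu"] >= pod["cpu"]
        and node["memory"] >= pod["memory"]
    }
    if not feasible:
        return None

    def score(item):
        name, node = item
        # Prefer the node left with the most free capacity; break ties by name.
        headroom = (node["cpu"] - pod["cpu"]) + (node["memory"] - pod["memory"])
        return (-headroom, name)

    return min(feasible.items(), key=score)[0]
```

A pod that fits nowhere simply stays unscheduled (None here), which corresponds to a pod sitting in Pending.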

kubelet-start

Throughout this process, the kubelet has been in a crash loop because it hasn't had a valid configuration.

This phase generates a config which (at least on my system) is stored at /var/lib/kubelet/config.yaml, as well as a "bootstrap" configuration that allows the kubelet to connect to the control plane (and retrieve credentials for long-term use).

Then the kubelet is restarted and will bootstrap with the control plane.

upload-certs

This phase enables the secure distribution of the certificates we created above, in the certs phases.

Some certificates need to be shared across the cluster (or at least across the control plane) for secure communication. This includes the certificates for the API server, etcd, the front proxy, etc.

kubeadm generates an encryption key that is used to encrypt the certificates, so they're not actually exposed in plain text at any point. Then the encrypted certificates are uploaded to etcd, a distributed key-value store that Kubernetes uses for persisting cluster state. To facilitate future joins of control plane nodes without having to manually distribute certificates, these encrypted certificates are stored in a specific kubeadm-certs secret.

The encryption key is required to decrypt the certificates for use by joining nodes. This key is not uploaded to the cluster for security reasons. Instead, it must be manually shared with any future control plane nodes that join the cluster. kubeadm outputs this key upon completion of the upload-certs phase, and it's the administrator's responsibility to securely transfer this key when adding new control plane nodes.

This process allows for the secure addition of new control plane nodes to the cluster by ensuring they have access to the necessary certificates to communicate securely with the rest of the cluster. Without this phase, administrators would have to manually copy certificates to each new node, which can be error-prone and insecure.

By automating the distribution of these certificates and utilizing encryption for their transfer, kubeadm significantly simplifies the process of scaling the cluster's control plane, while maintaining high standards of security.

mark-control-plane

In this phase, kubeadm applies a specific label to control plane nodes: node-role.kubernetes.io/control-plane=""; this marks the node as part of the control plane. Additionally, the node receives a taint, node-role.kubernetes.io/control-plane=:NoSchedule, which will prevent normal workloads from being scheduled on it.

As noted previously, I see no reason to remove this taint, although I'll probably enable some tolerations for certain workloads (monitoring, etc).
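Since tolerations are how a workload opts in to running on a tainted node, here's a sketch of the matching rule as I understand it from the Kubernetes documentation. It only considers the NoSchedule effect, and the dictionaries are simplified stand-ins for the real API objects.

```python
CONTROL_PLANE_TAINT = {
    "key": "node-role.kubernetes.io/control-plane",
    "value": "",
    "effect": "NoSchedule",
}


def tolerates(toleration, taint):
    """Return True if a single toleration matches a single taint."""
    effect = toleration.get("effect", "")
    if effect and effect != taint["effect"]:
        return False  # an empty effect matches every effect
    key = toleration.get("key", "")
    operator = toleration.get("operator", "Equal")
    if not key:
        # An empty key is only meaningful with Exists, and then matches every taint.
        return operator == "Exists"
    if key != taint["key"]:
        return False
    if operator == "Exists":
        return True
    return toleration.get("value", "") == taint.get("value", "")


def can_schedule(tolerations, taints):
    """A pod may land on a node only if every NoSchedule taint is tolerated."""
    return all(
        any(tolerates(toleration, taint) for toleration in tolerations)
        for taint in taints
        if taint["effect"] == "NoSchedule"
    )
```

A monitoring agent that should run everywhere would carry a toleration with the control-plane key and operator Exists; a pod with no tolerations is kept off the control plane.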

bootstrap-token

This phase creates bootstrap tokens, which are used to authenticate new nodes joining the cluster. This is how we are able to easily scale the cluster dynamically without copying multiple certificates and private keys around.

The "TLS bootstrap" process allows a kubelet to automatically request a certificate from the Kubernetes API server. This certificate is then used for secure communication within the cluster. The process involves the use of a bootstrap token and a Certificate Signing Request (CSR) that the kubelet generates. Once approved, the kubelet receives a certificate and key that it uses for authenticated communication with the API server.

A bootstrap token is a simple bearer token composed of two parts, an ID and a secret, formatted as <id>.<secret>. Both parts are randomly generated strings; together they authenticate the joining node to the cluster.

The generated token is configured with specific permissions using RBAC policies. These permissions typically allow the token to create a certificate signing request (CSR) that the Kubernetes control plane can then approve, granting the joining node the necessary certificates to communicate securely within the cluster.

Bootstrap tokens expire after a set period (24 hours by default), ensuring that tokens cannot be reused indefinitely for joining new nodes to the cluster. This behavior enhances the security posture of the cluster by limiting the window during which a token is valid.

Once generated and configured, the bootstrap token is stored as a secret in the kube-system namespace.
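The token format is easy to check mechanically. The sketch below assumes the documented shape of six lowercase alphanumeric characters for the ID and sixteen for the secret, and the bootstrap-token-<id> naming of the Secret; the function names are made up, and in practice kubeadm token create does all of this for you.

```python
import re
import secrets
import string
from datetime import datetime, timedelta, timezone

TOKEN_PATTERN = re.compile(r"^([a-z0-9]{6})\.([a-z0-9]{16})$")
ALPHABET = string.ascii_lowercase + string.digits


def generate_token():
    """Generate a random token in the <id>.<secret> shape."""
    token_id = "".join(secrets.choice(ALPHABET) for _ in range(6))
    token_secret = "".join(secrets.choice(ALPHABET) for _ in range(16))
    return f"{token_id}.{token_secret}"


def secret_name(token):
    """Name of the kube-system Secret that stores the token."""
    match = TOKEN_PATTERN.match(token)
    if match is None:
        raise ValueError("not a valid bootstrap token")
    return f"bootstrap-token-{match.group(1)}"


def expiry(created_at, ttl=timedelta(hours=24)):
    """Expiry timestamp for a token created at created_at (24 hours by default)."""
    return created_at + ttl


if __name__ == "__main__":
    token = generate_token()
    print(secret_name(token), expiry(datetime.now(timezone.utc)).isoformat())
```

Only the ID ends up in the Secret's name, so listing Secrets in kube-system doesn't reveal the part of the token that actually grants access.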

kubelet-finalize

This phase ensures that the kubelet is fully configured with the necessary settings to securely and effectively participate in the cluster. It involves applying any final kubelet configurations that might depend on the completion of the TLS bootstrap process.

addon

This phase sets up essential add-ons required for the cluster to meet the Kubernetes Conformance Tests.

/coredns

CoreDNS provides DNS services for the internal cluster network, allowing pods to find each other by name and services to load-balance across a set of pods.
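To see what "by name" means in practice, here's a sketch of how a short service name gets expanded inside a pod. It assumes the default cluster domain (cluster.local) and the usual pod resolver settings of three search domains with ndots:5; the function names are invented, and the service names in the usage below are just examples.

```python
SEARCH_TEMPLATES = ("{namespace}.svc.{domain}", "svc.{domain}", "{domain}")


def service_fqdn(service, namespace, domain="cluster.local"):
    """Fully qualified DNS name of a Service."""
    return f"{service}.{namespace}.svc.{domain}"


def lookup_candidates(name, pod_namespace, domain="cluster.local", ndots=5):
    """Names a pod's resolver would try, in order, for a given query."""
    if name.endswith("."):
        # A trailing dot marks the name as absolute; no search list is applied.
        return [name.rstrip(".")]
    expanded = [
        f"{name}.{template.format(namespace=pod_namespace, domain=domain)}"
        for template in SEARCH_TEMPLATES
    ]
    if name.count(".") >= ndots:
        return [name] + expanded  # enough dots: try the name as-is first
    return expanded + [name]      # otherwise walk the search list first


if __name__ == "__main__":
    print(service_fqdn("kube-dns", "kube-system"))
    print(lookup_candidates("argocd-server.argocd", "default"))
```

This is why a pod can reach a service in its own namespace by its bare name, and a service in another namespace as <service>.<namespace>.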

/kube-proxy

kube-proxy is responsible for managing network communication inside the cluster, implementing part of the Kubernetes Service concept by maintaining network rules on nodes. These rules allow network communication to pods from network sessions inside or outside the cluster.

kube-proxy ensures that the networking aspect of Kubernetes services is correctly handled, allowing for the routing of traffic to the appropriate destinations. On Linux it runs in iptables mode by default, where it programs kernel packet-filtering rules rather than proxying traffic itself; an IPVS mode is also available, and the older userspace mode was removed in Kubernetes 1.26. This allows services to be exposed to the external network, load-balances traffic across a service's pods, etc.
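As I understand it, in iptables mode the load balancing is done with a chain of rules, one per endpoint, each matching at random with a carefully chosen probability. The arithmetic is a little counterintuitive, so here's a worked sketch using exact fractions; it models only the probabilities, not iptables itself, and the function names are made up.

```python
from fractions import Fraction


def rule_probabilities(endpoint_count):
    """Per-rule match probability for a chain of endpoint_count rules.

    Rule k is only reached if rules 0..k-1 did not match, so it uses
    probability 1 / (endpoints remaining); the last rule always matches.
    """
    return [Fraction(1, endpoint_count - k) for k in range(endpoint_count)]


def overall_probabilities(endpoint_count):
    """Probability that a new connection ends up at each endpoint."""
    result = []
    reach = Fraction(1)  # probability of getting as far as the current rule
    for probability in rule_probabilities(endpoint_count):
        result.append(reach * probability)
        reach *= 1 - probability
    return result


if __name__ == "__main__":
    print(rule_probabilities(3))
    print(overall_probabilities(3))
```

For three endpoints the rules match with probability 1/3, 1/2, and 1, yet each endpoint receives exactly a third of new connections.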

show-join-command

This phase simplifies the process of expanding a Kubernetes cluster by generating bootstrap tokens and providing the necessary command to join additional nodes, whether they are worker nodes or additional control plane nodes.

In the next section, we'll actually bootstrap the cluster.

Bootstrapping the First Control Plane Node

With a solid idea of what it is that kubeadm init actually does, we can return to our command:

kubeadm init \

--control-plane-endpoint="10.4.0.10:6443" \

--kubernetes-version="stable-1.29" \

--service-cidr="172.16.0.0/20" \

--pod-network-cidr="192.168.0.0/16" \

--cert-dir="/etc/kubernetes/pki" \

--cri-socket="unix:///var/run/containerd/containerd.sock" \

--upload-certs

It's really pleasantly concise, given how much is going on under the hood.
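Since the real invocation is assembled from variables, it can help to think of it as data. Here's a sketch of that assembly in Python; the actual work is done by Ansible, the dictionary and function names are hypothetical, and the values are simply the ones from the command above.

```python
import shlex

# Values taken from the command above; the keys are kubeadm's own flag names.
KUBEADM_INIT_OPTIONS = {
    "control-plane-endpoint": "10.4.0.10:6443",
    "kubernetes-version": "stable-1.29",
    "service-cidr": "172.16.0.0/20",
    "pod-network-cidr": "192.168.0.0/16",
    "cert-dir": "/etc/kubernetes/pki",
    "cri-socket": "unix:///var/run/containerd/containerd.sock",
}


def build_kubeadm_init(options, upload_certs=True):
    """Return the kubeadm init invocation as an argv list."""
    argv = ["kubeadm", "init"]
    argv += [f"--{flag}={value}" for flag, value in options.items()]
    if upload_certs:
        argv.append("--upload-certs")
    return argv


if __name__ == "__main__":
    # Print the command as a single shell-safe line; nothing is executed.
    print(shlex.join(build_kubeadm_init(KUBEADM_INIT_OPTIONS)))
```

Building an argv list rather than a string avoids quoting surprises if a value ever contains a space or a shell metacharacter.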

The Ansible tasks also symlink the /etc/kubernetes/admin.conf file to ~/.kube/config (so we can use kubectl without having to specify the config file).

Then it sets up my preferred Container Network Interface addon, Calico. I have in the past sometimes used Flannel, but Flannel doesn't support NetworkPolicy resources as it is a Layer 3 networking solution, whereas Calico operates at Layer 3 and Layer 4, which allows it fine-grained control over traffic based on ports, protocol types, sources and destinations, etc.

I want to play with NetworkPolicy resources, so Calico it is.

The next couple of steps create bootstrap tokens so we can join the cluster.

Joining the Rest of the Control Plane

The next phase of bootstrapping is to admit the rest of the control plane nodes to the control plane.

First, we create a JoinConfiguration manifest, which should look something like this (in Jinja2):

apiVersion: kubeadm.k8s.io/v1beta3

kind: JoinConfiguration

discovery:

  bootstrapToken:

    apiServerEndpoint: {{ load_balancer.ipv4_address }}:6443

    token: {{ kubeadm_token }}

    unsafeSkipCAVerification: true

  timeout: 5m0s

  tlsBootstrapToken: {{ kubeadm_token }}

controlPlane:

  localAPIEndpoint:

    advertiseAddress: {{ ipv4_address }}

    bindPort: 6443

  certificateKey: {{ k8s_certificate_key }}

nodeRegistration:

  name: {{ inventory_hostname }}

  criSocket: {{ k8s_cri_socket }}

{% if inventory_hostname in control_plane.rest.hostnames %}

  taints:

    - effect: NoSchedule

      key: node-role.kubernetes.io/control-plane

{% else %}

  taints: []

{% endif %}

I haven't bothered to substitute the values; none of them should be mysterious at this point.

After that, a simple kubeadm join --config /etc/kubernetes/kubeadm-controlplane.yaml on each node is sufficient to complete the control plane.

Admitting the Worker Nodes

Admitting the worker nodes to the cluster is simple; we just have the first control plane node create a token and print the join command (kubeadm token create --print-join-command) for each worker node, then execute it from that worker node.

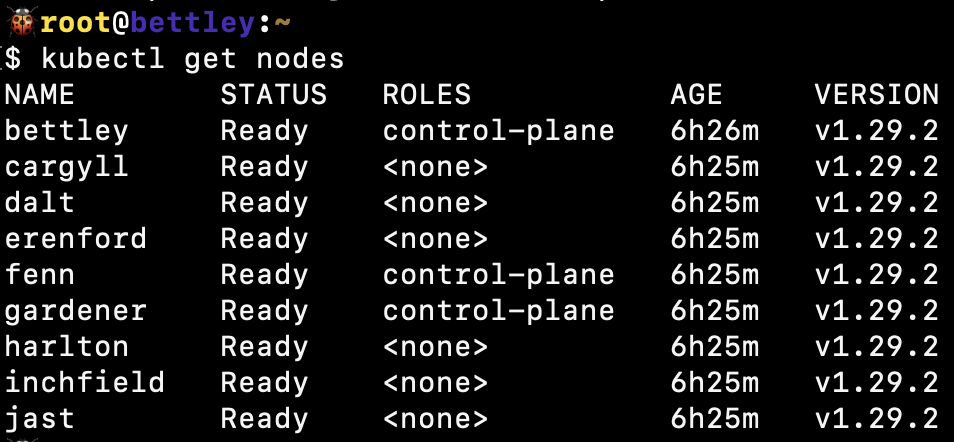

And voilà! We have a functioning cluster.

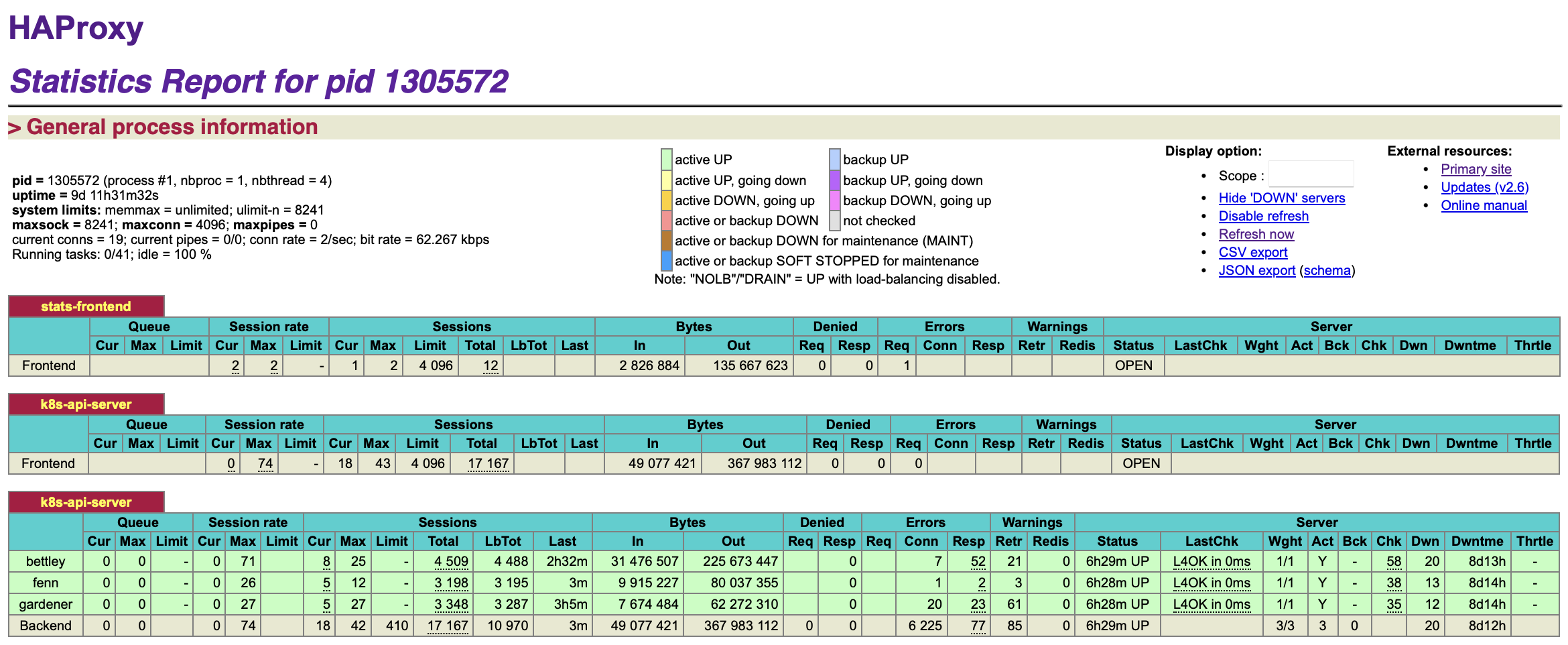

We can also see that the cluster is functioning well from HAProxy's perspective:

As a reminder, this is all persisted in the Ansible tasks.

Where Do We Go From Here?

We have a functioning cluster now, which is to say that I've spent many hours of my life that I'm not going to get back just doing the same thing that the official documentation manages to convey in just a few lines.

Or that Jeff Geerling's geerlingguy.kubernetes has already managed to do.

And it's not a tenth of a percent as much as Kubespray can do.

Not much to be proud of, but again, this is a personal learning journey. I'm just trying to build a cluster thoughtfully, limiting the black boxes and the magic as much as practical.

In the following sections, I'll add more functionality.

Installing Helm

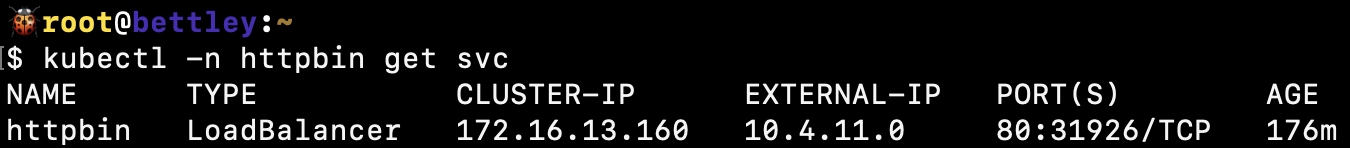

I have a lot of ambitions for this cluster, but after some deliberation, the thing I most want to do right now is deploy something to Kubernetes.

So I'll be starting out by installing Argo CD, and I'll do that... soon. In the next chapter. I decided to install Argo CD via Helm, since I expect that Helm will be useful in other situations as well, e.g. trying out applications before I commit (no pun intended) to bringing them into GitOps.

So I created a playbook and role to cover installing Helm.

Fortunately, this is fairly simple to install and trivial to configure, which is not something I can say for Argo CD 🙂

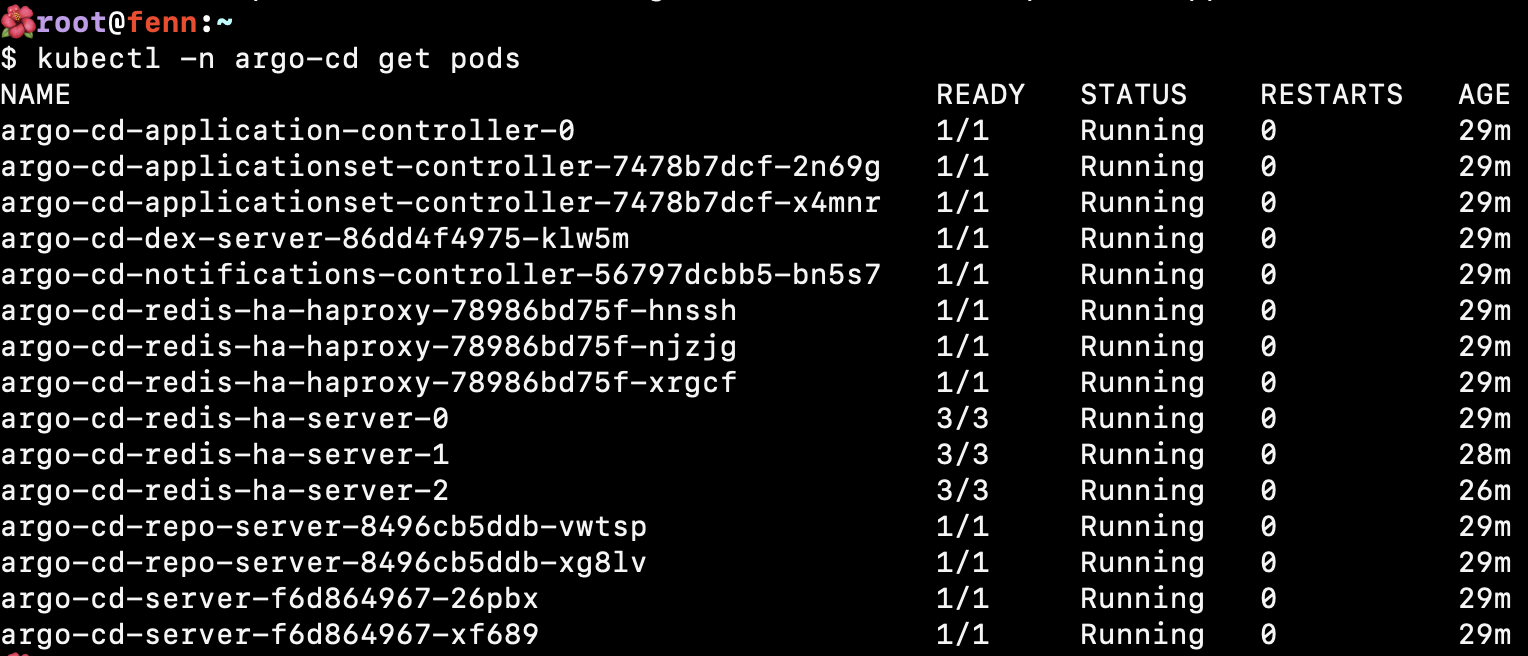

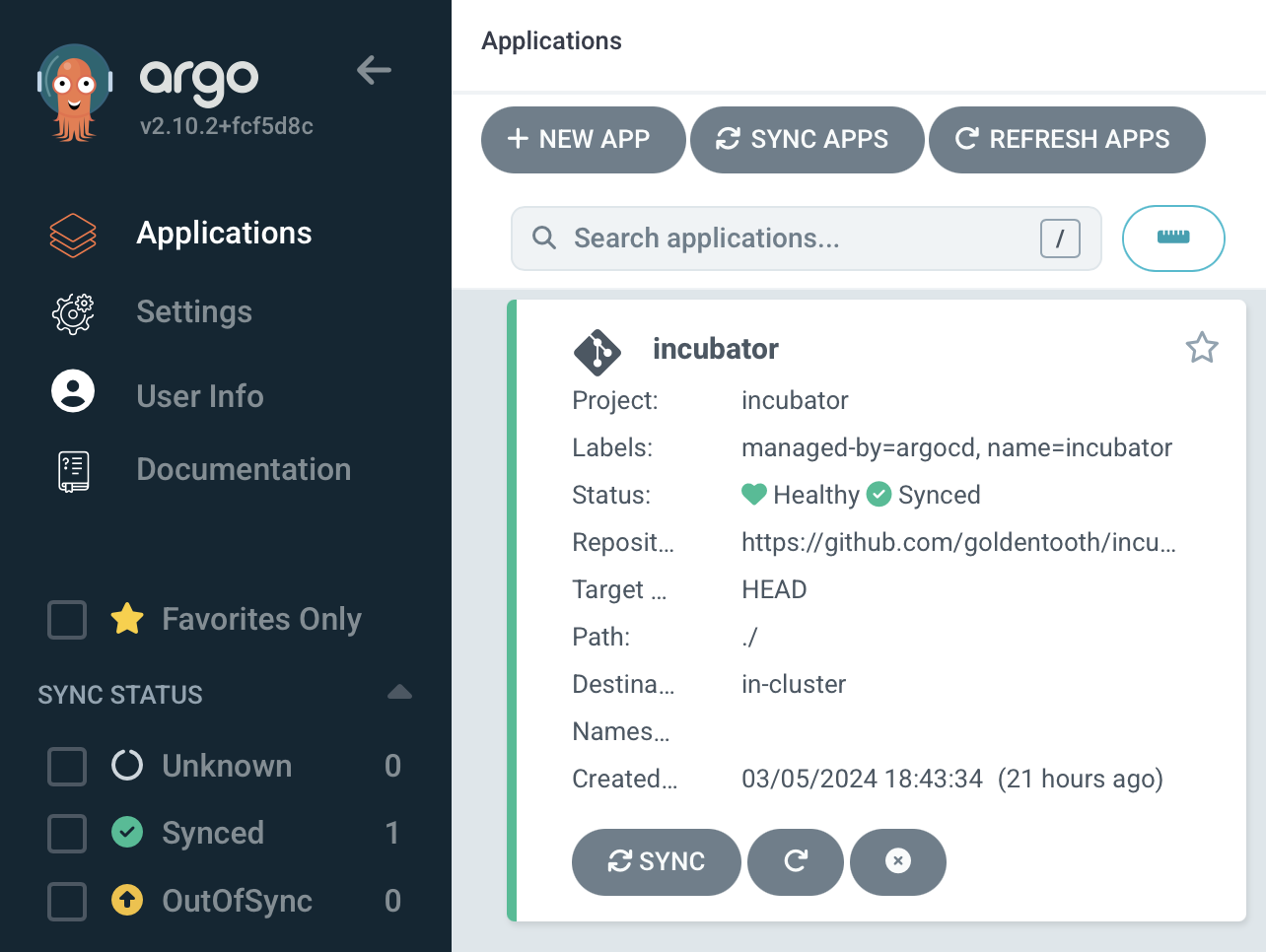
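
A minimal sketch of what such a playbook could look like follows. This is an assumption-laden illustration, not the role used here: the host group, the helm_version and helm_arch variables, and the /tmp staging directory are all hypothetical, and the version shown is just an example release.

```yaml
# Hypothetical play; host group, variables, and paths are assumptions.
- name: 'Install Helm.'
  hosts: 'k8s_control_plane'
  become: true
  vars:
    helm_version: 'v3.14.0'  # Example release only.
    helm_arch: 'arm64'       # Assumes 64-bit ARM nodes; use 'amd64' on x86_64.
  tasks:
    # Helm release tarballs unpack into a `linux-<arch>/` directory.
    - name: 'Download and unpack the Helm release tarball.'
      ansible.builtin.unarchive:
        src: "https://get.helm.sh/helm-{{ helm_version }}-linux-{{ helm_arch }}.tar.gz"
        dest: '/tmp'
        remote_src: true
        creates: "/tmp/linux-{{ helm_arch }}/helm"

    - name: 'Install the helm binary.'
      ansible.builtin.copy:
        src: "/tmp/linux-{{ helm_arch }}/helm"
        dest: '/usr/local/bin/helm'
        remote_src: true
        mode: '0755'
```

Helm 3 has no server-side component, so once the binary is on the path there is nothing else to configure.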

Installing Argo CD

GitOps is a methodology based around treating IaC stored in Git as a source of truth for the desired state of the infrastructure. Put simply, whatever you push to main becomes the desired state and your IaC systems, whether they be Terraform, Ansible, etc, will be invoked to bring the actual state into alignment.

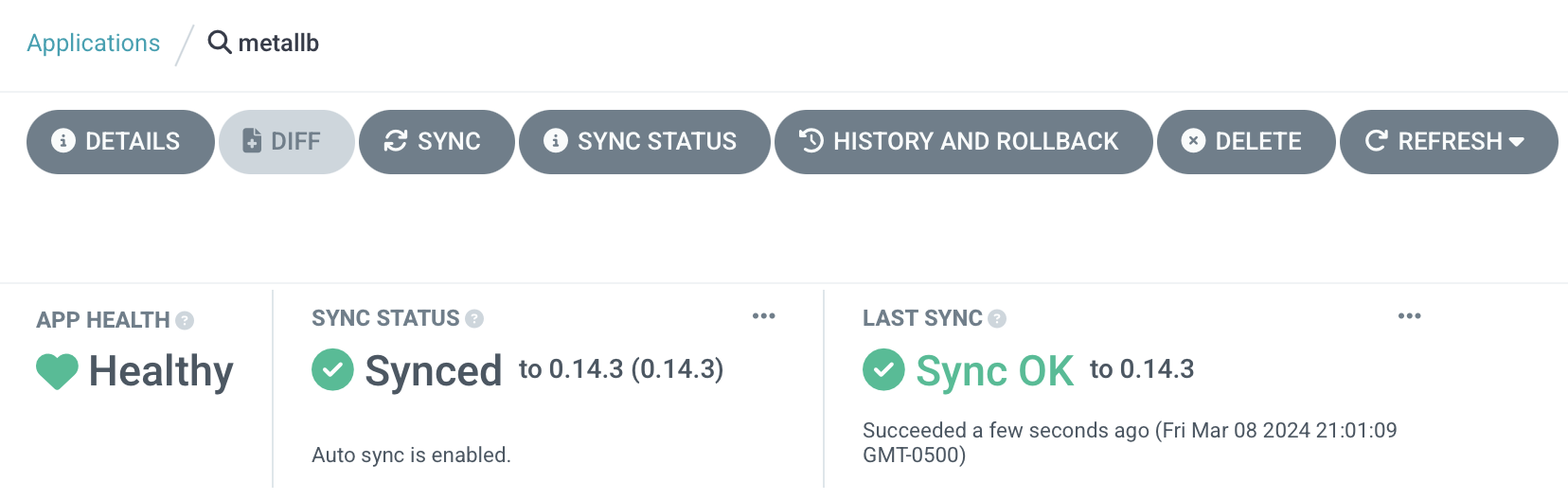

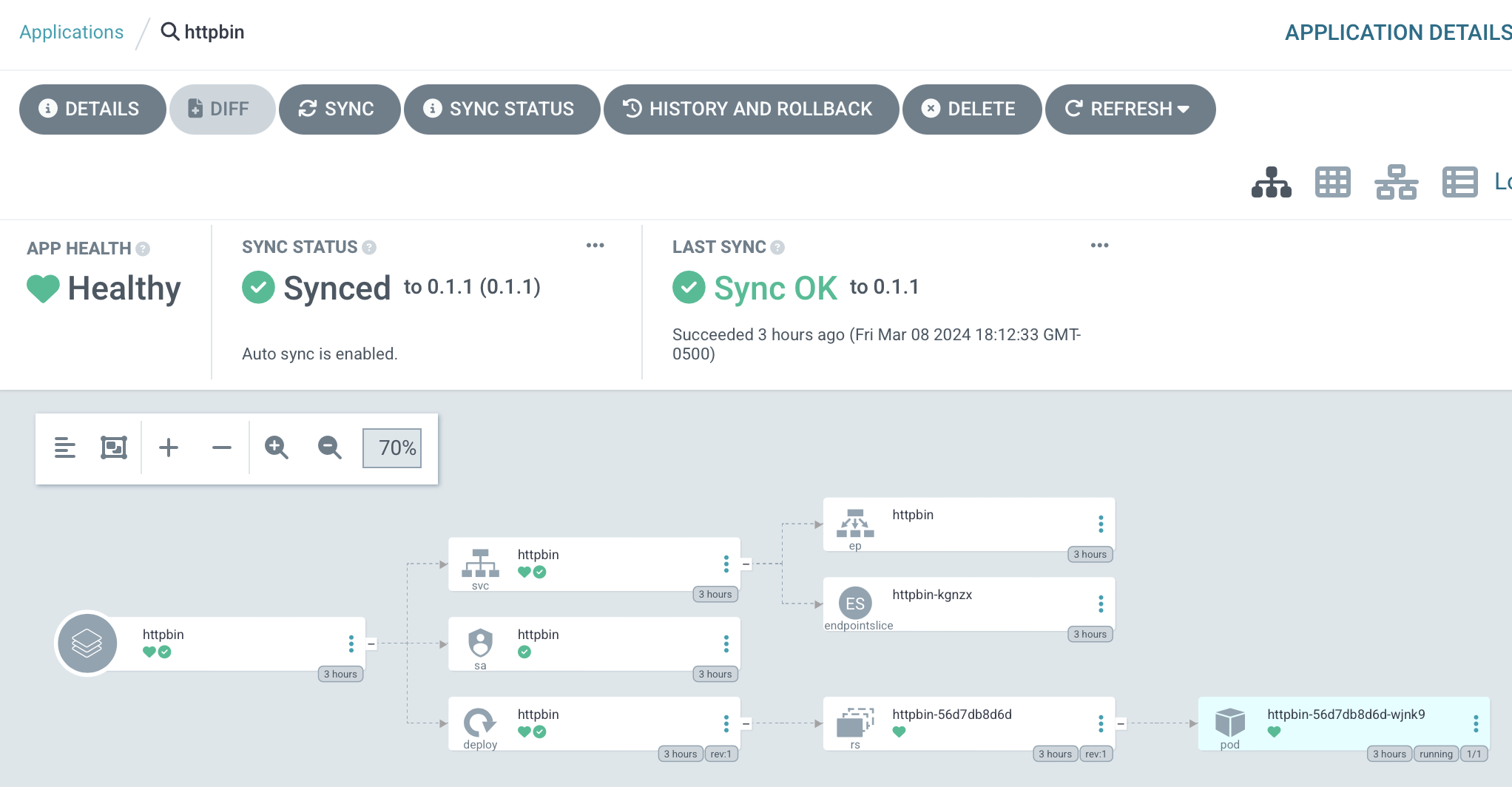

Argo CD is a popular system for implementing GitOps with Kubernetes. It can observe a Git repository for changes and react to those changes accordingly, creating/destroying/replacing resources as needed within the cluster.

Argo CD is a large, complicated application in its own right; its Helm chart is thousands of lines long. I'm not trying to learn it all right now, and fortunately, I have a fairly simple structure in mind.

I'll install Argo CD via a new Ansible playbook and role that use Helm, which we set up in the last section.
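
As a rough sketch, the core of such a role might be two tasks using the kubernetes.core collection. The release name, the repository alias, and the template file name (argocd-values.yaml.j2) are assumptions for the example, not necessarily what my role uses.

```yaml
# Hypothetical tasks; names and the values template path are assumptions.
- name: 'Add the Argo project Helm repository.'
  kubernetes.core.helm_repository:
    name: 'argo'
    repo_url: 'https://argoproj.github.io/argo-helm'

- name: 'Install Argo CD from its Helm chart.'
  kubernetes.core.helm:
    name: 'argocd'
    chart_ref: 'argo/argo-cd'
    release_namespace: 'argocd'
    create_namespace: true
    update_repo_cache: true
    # Render the values file through Jinja first, so that Ansible variables
    # inside it are substituted before Helm sees them.
    values: "{{ lookup('ansible.builtin.template', 'argocd-values.yaml.j2') | from_yaml }}"
```

Rendering the values through a template is one way to let Ansible variables and Vault secrets flow into the chart without committing them in plain text.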

None of this is particularly complex, but I'll document some of my values overrides here:

# I've seen a mix of `argocd` and `argo-cd` scattered around. I preferred
# `argocd`, but I will shift to `argo-cd` where possible to improve
# consistency.
#
# EDIT: The `argocd` CLI tool appears to be broken and does not allow me to
# override the names of certain components when port forwarding.
# See https://github.com/argoproj/argo-cd/issues/16266 for details.
# As a result, I've gone through and reverted my changes to standardize as much
# as possible on `argocd`. FML.
nameOverride: 'argocd'

global:
  # This evaluates to `argocd.goldentooth.hellholt.net`.
  domain: "{{ argocd_domain }}"
  # Add Prometheus scrape annotations to all metrics services. This can
  # be used as an alternative to the ServiceMonitors.
  addPrometheusAnnotations: true
  # Default network policy rules used by all components.
  networkPolicy:
    # Create NetworkPolicy objects for all components; this is currently false
    # but I think I'd like to create these later.
    create: false
    # Default deny all ingress traffic; I want to improve security, so I hope
    # to enable this later.
    defaultDenyIngress: false

configs:
  secret:
    createSecret: true
    # Specify a password. I store an "easy" password, which is in my muscle
    # memory, so I'll use that for right now.
    argocdServerAdminPassword: "{{ vault.easy_password | password_hash('bcrypt') }}"
  # Refer to the repositories that host our applications.
  repositories:
    # This is the main (and likely only) one.
    gitops:
      type: 'git'
      name: 'gitops'
      # This turns out to be https://github.com/goldentooth/gitops.git
      url: "{{ argocd_app_repo_url }}"

redis-ha:
  # Enable Redis high availability.
  enabled: true

controller:
  # The HA configuration keeps this at one, and I don't see a reason to change.
  replicas: 1

server:
  # Enable
  autoscaling:
    enabled: true
    # This immediately scaled up to 3 replicas.
    minReplicas: 2
  # I'll make this more secure _soon_.
  extraArgs:
    - '--insecure'
  # I don't have load balancing set up yet.
  service:
    type: 'ClusterIP'

repoServer:
  autoscaling:
    enabled: true
    minReplicas: 2

applicationSet:
  replicas: 2

After running kubectl -n argocd port-forward service/argocd-server 8081:443 --address 0.0.0.0 on one of my control plane nodes, I'm able to view the web interface and log in, but there's nothing interesting to see yet.

I'll try to improve this situation shortly.

The "Incubator" GitOps Application

Previously, we discussed GitOps and how Argo CD provides a platform for implementing GitOps for Kubernetes.

As mentioned, the general idea is to have some Git repository somewhere that defines an application. We create a corresponding resource in Argo CD to represent that application, and Argo CD will henceforth watch the repository and make changes to the running application as needed.

What does the repository actually include? Well, it might be a Helm chart, or a kustomization, or raw manifests, etc. Pretty much anything that could be done in Kubernetes.

Of course, setting this up involves some manual work; you need to actually create the application within Argo CD and, if you want it to hang around, you need to presumably commit that resource to some version control system somewhere. We of course want to be careful who has access to that repository, though, and we might not want engineers to have access to Argo CD itself. So suddenly there's a rather uncomfortable amount of work and coupling in all of this.

A common pattern in Argo CD is the "app-of-apps" pattern. This is simply an Argo CD application pointing to a repository that contains other Argo CD applications. Thus you can have a single application created for you by the principal platform engineer, and you can turn it into fifty or a hundred finely grained pieces of infrastructure that said principal engineer doesn't have to know about 🙂

(If they haven't configured the security settings carefully, it can all just be your little secret 😉)

Given that we're operating in a lab environment, we can use the "app-of-apps" approach for the Incubator, which is where we can try out new configurations. We can give it fairly unrestricted access while we work on getting things to deploy correctly, and then lock things down as we zero in on a stable configuration.

A (relatively) new construct in Argo CD is the ApplicationSet, which seeks to define more clearly how applications are created and to fix the problems with the "app-of-apps" approach. That's the approach we will take in this cluster for mature applications.
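
To give a sense of the shape, here's a hedged sketch of an ApplicationSet using the Git directory generator. The apps/* directory layout, the resource name, and the use of the default project are assumptions for illustration; this is not the configuration this cluster ends up with.

```yaml
# Hypothetical ApplicationSet; the repository layout is an assumption.
apiVersion: 'argoproj.io/v1alpha1'
kind: 'ApplicationSet'
metadata:
  name: 'gitops'
  namespace: 'argocd'
spec:
  generators:
    # Generate one Application per directory matching `apps/*`.
    - git:
        repoURL: 'https://github.com/goldentooth/gitops.git'
        revision: 'HEAD'
        directories:
          - path: 'apps/*'
  template:
    metadata:
      # `{{path.basename}}` is filled in by the generator, e.g. `apps/foo`
      # yields an Application named `foo`.
      name: '{{path.basename}}'
    spec:
      project: 'default'
      source:
        repoURL: 'https://github.com/goldentooth/gitops.git'
        targetRevision: 'HEAD'
        path: '{{path}}'
      destination:
        server: 'https://kubernetes.default.svc'
        namespace: '{{path.basename}}'
      syncPolicy:
        syncOptions:
          - CreateNamespace=true
```

The double-brace placeholders here are ApplicationSet template parameters, not Jinja; if a manifest like this were rendered through Ansible, they would need to be escaped.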

But meanwhile, we'll create an AppProject manifest for the Incubator:

---
apiVersion: 'argoproj.io/v1alpha1'
kind: 'AppProject'
metadata:
  name: 'incubator'
  # Argo CD resources need to deploy into the Argo CD namespace.
  namespace: 'argocd'
  finalizers:
    - 'resources-finalizer.argocd.argoproj.io'
spec:
  description: 'GoldenTooth incubator project'
  # Allow manifests to deploy from any Git repository.
  # This is an acceptable security risk because this is a lab environment
  # and I am the only user.
  sourceRepos:
    - '*'
  destinations:
    # Prevent any resources from deploying into the kube-system namespace.
    - namespace: '!kube-system'
      server: '*'
    # Allow resources to deploy into any other namespace.
    - namespace: '*'
      server: '*'
  clusterResourceWhitelist:
    # Allow any cluster resources to deploy.
    - group: '*'
      kind: '*'

As mentioned before, this is very permissive. It only slightly differs from the default project by preventing resources from deploying into the kube-system namespace.
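
For contrast, a locked-down project might look something like the following sketch. Everything specific in it (the project name, the sandbox namespace, the repository pattern) is hypothetical; it only illustrates which knobs would get tightened.

```yaml
# Hypothetical stricter project; names and namespaces are assumptions.
apiVersion: 'argoproj.io/v1alpha1'
kind: 'AppProject'
metadata:
  name: 'incubator-restricted'
  namespace: 'argocd'
spec:
  description: 'Hypothetical locked-down variant of the incubator project'
  # Only allow manifests from repositories under one GitHub organization.
  sourceRepos:
    - 'https://github.com/goldentooth/*'
  # Only allow deployment into explicitly named namespaces on this cluster.
  destinations:
    - namespace: 'argocd'
      server: 'https://kubernetes.default.svc'
    - namespace: 'sandbox'
      server: 'https://kubernetes.default.svc'
  # Only allow one kind of cluster-scoped resource: Namespaces.
  clusterResourceWhitelist:
    - group: ''
      kind: 'Namespace'
```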

We'll also create an Application manifest:

apiVersion: 'argoproj.io/v1alpha1'
kind: 'Application'
metadata:
  name: 'incubator'
  namespace: 'argocd'
  labels:
    name: 'incubator'
    managed-by: 'argocd'
spec:
  project: 'incubator'
  source:
    repoURL: "https://github.com/goldentooth/incubator.git"
    path: './'
    targetRevision: 'HEAD'
  destination:
    server: 'https://kubernetes.default.svc'
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
    syncOptions:
      - Validate=true
      - CreateNamespace=true
      - PrunePropagationPolicy=foreground
      - PruneLast=true
      - RespectIgnoreDifferences=true
      - ApplyOutOfSyncOnly=true

That's sufficient to get it to pop up in the Applications view in Argo CD.

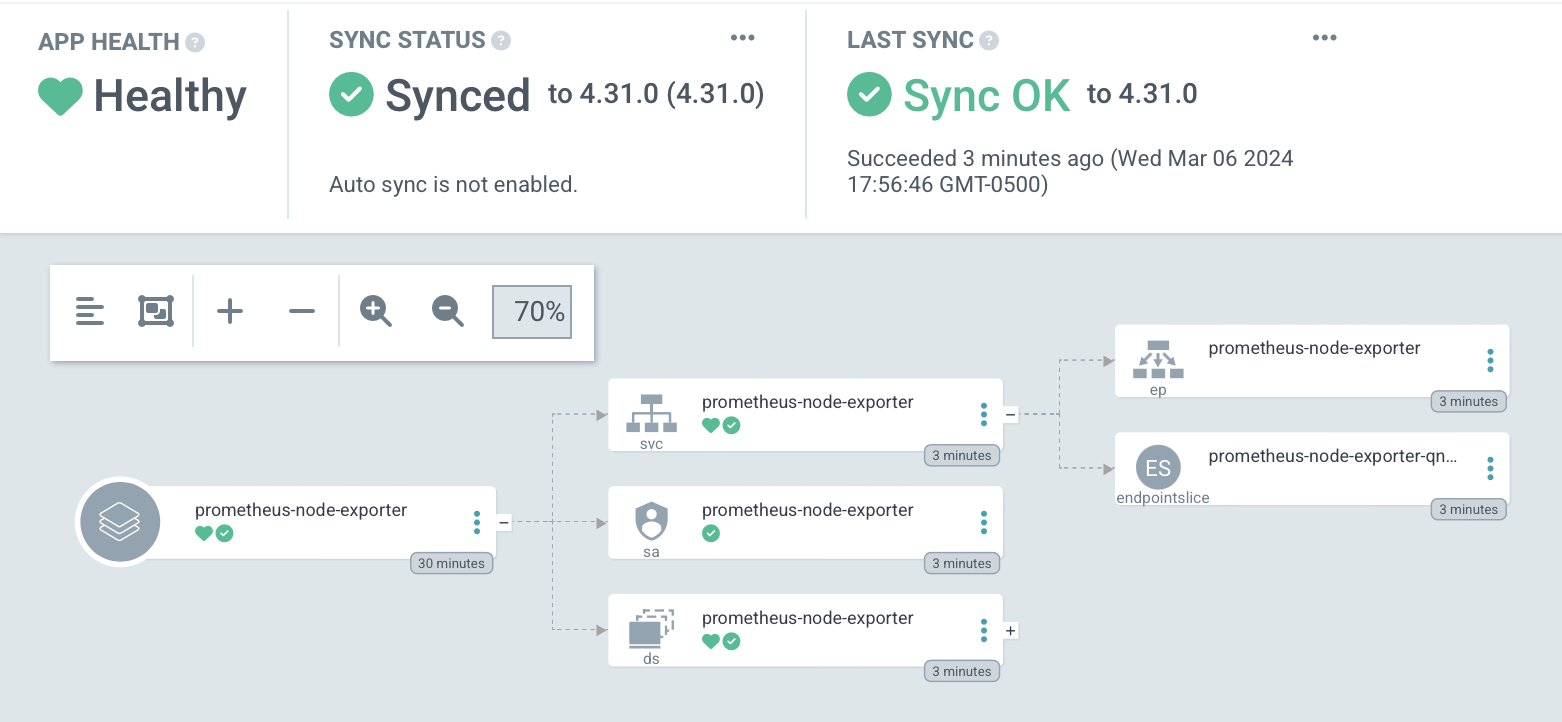

Prometheus Node Exporter

Sure, I could just jump straight into kube-prometheus, but where's the fun (and, more importantly, the learning) in that?

I'm going to try to build a system from the ground up, tweaking each component as I go.

Prometheus Node Exporter seems like a reasonable place to begin, as it will give me per-node statistics that I can look at immediately. Or almost immediately.

The first order of business is to modify our incubator repository to refer to the Prometheus Node Exporter Helm chart. We do that by adding the following manifest to the incubator repo:

# templates/prometheus_node_exporter.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: prometheus-node-exporter
---
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: prometheus-node-exporter
  namespace: argocd
  finalizers:
    - resources-finalizer.argocd.argoproj.io
spec:
  destination:
    namespace: prometheus-node-exporter
    server: 'https://kubernetes.default.svc'
  project: incubator
  source:
    repoURL: https://prometheus-community.github.io/helm-charts
    chart: prometheus-node-exporter
    targetRevision: 4.31.0
    helm:
      releaseName: prometheus-node-exporter

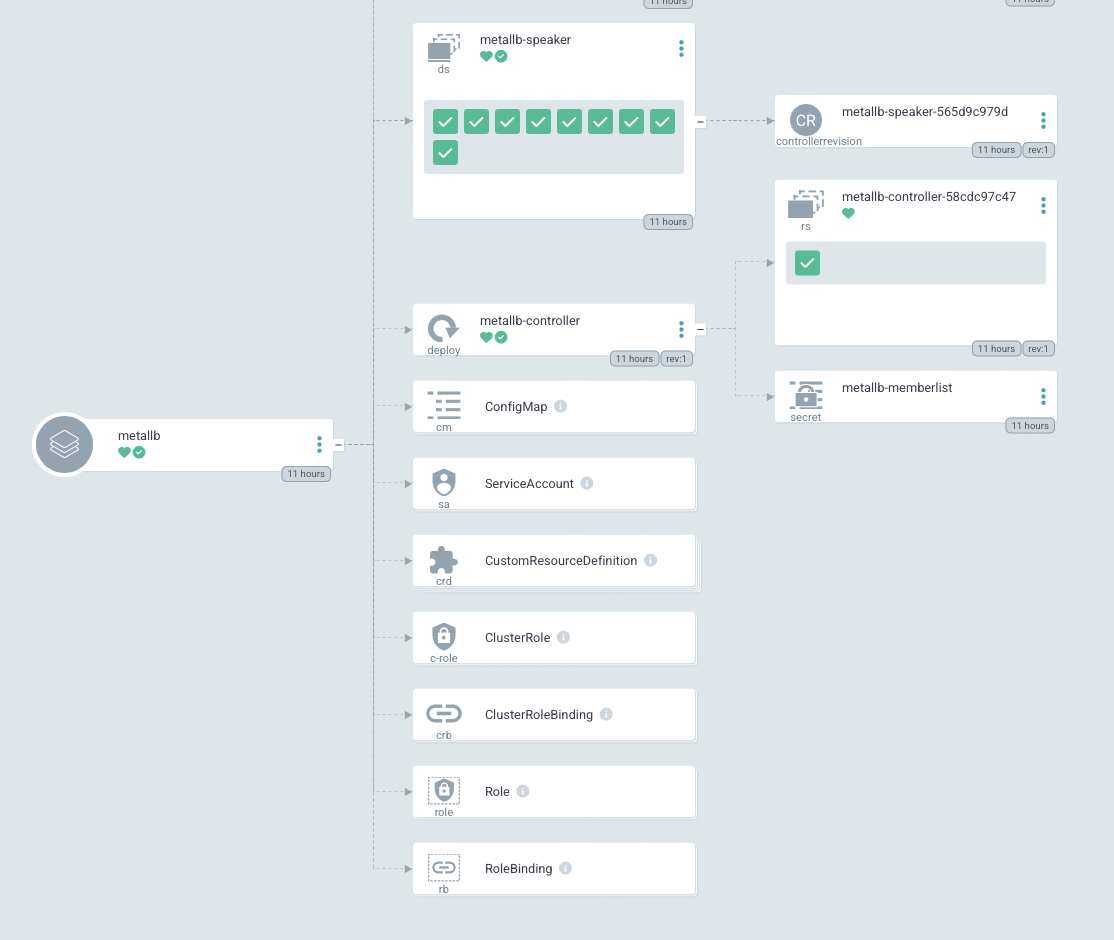

We'll soon see the resources created.

And we can curl a metric butt-ton of information:

$ curl localhost:9100/metrics
# HELP go_gc_duration_seconds A summary of the pause duration of garbage collection cycles.
# TYPE go_gc_duration_seconds summary
go_gc_duration_seconds{quantile="0"} 0
go_gc_duration_seconds{quantile="0.25"} 0
go_gc_duration_seconds{quantile="0.5"} 0
go_gc_duration_seconds{quantile="0.75"} 0
go_gc_duration_seconds{quantile="1"} 0
go_gc_duration_seconds_sum 0
go_gc_duration_seconds_count 0
# HELP go_goroutines Number of goroutines that currently exist.
# TYPE go_goroutines gauge
go_goroutines 7
# HELP go_info Information about the Go environment.
# TYPE go_info gauge
go_info{version="go1.21.4"} 1
# HELP go_memstats_alloc_bytes Number of bytes allocated and still in use.
# TYPE go_memstats_alloc_bytes gauge
go_memstats_alloc_bytes 829976
# HELP go_memstats_alloc_bytes_total Total number of bytes allocated, even if freed.
# TYPE go_memstats_alloc_bytes_total counter
go_memstats_alloc_bytes_total 829976
# HELP go_memstats_buck_hash_sys_bytes Number of bytes used by the profiling bucket hash table.
# TYPE go_memstats_buck_hash_sys_bytes gauge
go_memstats_buck_hash_sys_bytes 1.445756e+06
# HELP go_memstats_frees_total Total number of frees.
# TYPE go_memstats_frees_total counter
go_memstats_frees_total 704
# HELP go_memstats_gc_sys_bytes Number of bytes used for garbage collection system metadata.
# TYPE go_memstats_gc_sys_bytes gauge
go_memstats_gc_sys_bytes 2.909376e+06
# HELP go_memstats_heap_alloc_bytes Number of heap bytes allocated and still in use.
# TYPE go_memstats_heap_alloc_bytes gauge
go_memstats_heap_alloc_bytes 829976
# HELP go_memstats_heap_idle_bytes Number of heap bytes waiting to be used.
# TYPE go_memstats_heap_idle_bytes gauge
go_memstats_heap_idle_bytes 1.458176e+06
# HELP go_memstats_heap_inuse_bytes Number of heap bytes that are in use.
# TYPE go_memstats_heap_inuse_bytes gauge
go_memstats_heap_inuse_bytes 2.310144e+06
# HELP go_memstats_heap_objects Number of allocated objects.
# TYPE go_memstats_heap_objects gauge
go_memstats_heap_objects 8628
# HELP go_memstats_heap_released_bytes Number of heap bytes released to OS.
# TYPE go_memstats_heap_released_bytes gauge
go_memstats_heap_released_bytes 1.458176e+06
# HELP go_memstats_heap_sys_bytes Number of heap bytes obtained from system.
# TYPE go_memstats_heap_sys_bytes gauge
go_memstats_heap_sys_bytes 3.76832e+06
# HELP go_memstats_last_gc_time_seconds Number of seconds since 1970 of last garbage collection.
# TYPE go_memstats_last_gc_time_seconds gauge
go_memstats_last_gc_time_seconds 0
# HELP go_memstats_lookups_total Total number of pointer lookups.
# TYPE go_memstats_lookups_total counter
go_memstats_lookups_total 0
# HELP go_memstats_mallocs_total Total number of mallocs.
# TYPE go_memstats_mallocs_total counter
go_memstats_mallocs_total 9332
# HELP go_memstats_mcache_inuse_bytes Number of bytes in use by mcache structures.
# TYPE go_memstats_mcache_inuse_bytes gauge
go_memstats_mcache_inuse_bytes 1200
# HELP go_memstats_mcache_sys_bytes Number of bytes used for mcache structures obtained from system.
# TYPE go_memstats_mcache_sys_bytes gauge
go_memstats_mcache_sys_bytes 15600
# HELP go_memstats_mspan_inuse_bytes Number of bytes in use by mspan structures.
# TYPE go_memstats_mspan_inuse_bytes gauge
go_memstats_mspan_inuse_bytes 37968
# HELP go_memstats_mspan_sys_bytes Number of bytes used for mspan structures obtained from system.
# TYPE go_memstats_mspan_sys_bytes gauge
go_memstats_mspan_sys_bytes 48888

# HELP go_memstats_next_gc_bytes Number of heap bytes when next garbage collection will take place.